Mastering MongoDB Aggregation Pipeline for Efficient Data Processing

MongoDB's Aggregation Pipeline is a powerful tool for processing and analyzing data, suitable for both real-time queries and offline data analysis. It allows developers to use multiple stages to transform, filter, group, and sort data, enabling efficient execution of complex computations. This article will explore the basic concepts, application examples, performance analysis, and optimization strategies of the Aggregation Pipeline.

Aggregation Pipeline Basics

The Aggregation Pipeline consists of multiple stages, each responsible for a specific data processing task, such as:

$match: Filters documents, similar to SQL'sWHERE, reducing the amount of data scanned. For example,SELECT * FROM orders WHERE status = 'active';is equivalent to{ "$match": { "status": "active" } }.$group: Groups data and computes aggregate values, similar to SQL'sGROUP BY. For example,SELECT category, COUNT(*) FROM orders GROUP BY category;can be achieved in MongoDB with{ "$group": { "_id": "$category", "count": { "$sum": 1 } } }.$sort: Sorts data, similar to SQL'sORDER BY, for example,SELECT * FROM orders ORDER BY createdAt DESC;is equivalent to{ "$sort": { "createdAt": -1 } }.$project: Adjusts the output fields, similar to SQL'sSELECT column1, column2 FROM table;, which can be implemented in MongoDB with{ "$project": { "name": 1, "price": 1 } }.$lookup: Performs a table join (similar to SQL'sJOIN), for example, SQL'sSELECT * FROM orders INNER JOIN customers ON orders.customerId = customers.id;can be implemented in MongoDB with{ "$lookup": { "from": "customers", "localField": "customerId", "foreignField": "_id", "as": "customer" } }.$unwind: Expands array fields, similar to SQL'sLATERAL VIEW, useful for processing nested data.$merge: Writes the result to a new collection, similar to SQL'sINSERT INTO new_table SELECT * FROM old_table;.

Here is an example of an aggregation: filtering all documents where status is active, grouping them by category, calculating the count of documents in each category, and sorting the results by the count in descending order:

[

{ "$match": { "status": "active" } },

{ "$group": { "_id": "$category", "count": { "$sum": 1 } } },

{ "$sort": { "count": -1 } }

]Aggregation Use Cases

Real-time Data Analysis and Monitoring

In many business scenarios, companies need to monitor and analyze real-time data for quick decision-making. For example, in an e-commerce platform, analyzing user access data in real-time can help optimize recommendation systems, while in the financial industry, transaction monitoring can be used for fraud detection.

The Aggregation Pipeline allows developers to build efficient data

flow processing systems. For example, using $match to

filter specific transaction types and combining $group to

calculate statistics can enable real-time monitoring of abnormal

transactions. For instance:

[

{ "$match": { "transactionAmount": { "$gt": 10000 } } },

{ "$group": { "_id": "$userId", "totalSpent": { "$sum": "$transactionAmount" } } },

{ "$sort": { "totalSpent": -1 } }

]This Aggregation Pipeline monitors high-value transactions in real-time, sorting users' spending behavior, and helping identify suspicious transactions.

Data ETL and Pre-aggregation

For data warehouse and big data analysis scenarios, the Aggregation Pipeline can be used for data extraction (ETL) and pre-aggregation, reducing query overhead and improving performance. For example, a social media platform may need to analyze historical user behavior data to generate personalized recommendations.

A typical ETL task may include:

- Using

$lookupto join data from multiple collections, such as user behavior logs and product information. - Filtering invalid data to reduce storage pressure.

- Using

$groupto aggregate calculations and generate precomputed data tables. - Using

$mergeto store the data into a new collection for future queries.

[

{ "$match": { "eventType": { "$in": ["click", "purchase"] } } },

{ "$group": { "_id": "$userId", "interactions": { "$push": "$eventType" } } },

{ "$merge": "user_behavior_summary" }

]With such an Aggregation Pipeline, businesses can pre-compute user behavior characteristics, reducing the computational pressure during online queries and improving query performance. This approach is especially suitable for large-scale data processing, such as recommendation systems, ad targeting optimization, and user behavior analysis.

Practical Aggregation Sample

In this section, we will use a complete example to demonstrate how Aggregation works.

Data Preparation

Before we begin the Aggregation demonstration, we need to prepare an

example database called sales_db, which contains a

collection called orders with the following structure:

{

"order_id": 1,

"customer": "Alice",

"items": [

{ "product": "Laptop", "price": 1000, "quantity": 1 },

{ "product": "Mouse", "price": 50, "quantity": 2 }

],

"total": 1100,

"date": ISODate("2024-03-10T10:00:00Z")

}Insert some sample data using the mongo shell (including the

orders and customers collections):

> use sales_db

> db.orders.insertMany([

{

"order_id": 1,

"customer": "Alice",

"items": [

{ "product": "Laptop", "price": 1000, "quantity": 1 },

{ "product": "Mouse", "price": 50, "quantity": 2 }

],

"total": 1100,

"date": new ISODate("2024-03-10T10:00:00Z")

},

{

"order_id": 2,

"customer": "Bob",

"items": [

{ "product": "Monitor", "price": 300, "quantity": 1 },

{ "product": "Keyboard", "price": 80, "quantity": 1 }

],

"total": 380,

"date": new ISODate("2024-03-12T14:30:00Z")

},

{

"order_id": 3,

"customer": "Charlie",

"items": [

{ "product": "Tablet", "price": 500, "quantity": 1 },

{ "product": "Headphones", "price": 100, "quantity": 2 }

],

"total": 700,

"date": new ISODate("2024-03-15T09:45:00Z")

},

{

"order_id": 4,

"customer": "David",

"items": [

{ "product": "Smartphone", "price": 900, "quantity": 1 },

{ "product": "Charger", "price": 30, "quantity": 1 }

],

"total": 930,

"date": new ISODate("2024-03-18T12:10:00Z")

},

{

"order_id": 5,

"customer": "Alice",

"items": [

{ "product": "Laptop", "price": 1200, "quantity": 1 },

{ "product": "Mouse Pad", "price": 20, "quantity": 1 }

],

"total": 1220,

"date": new ISODate("2024-03-20T15:30:00Z")

}

]);

> db.customers.insertMany([

{ "_id": 1, "name": "Alice", "email": "alice@example.com", "phone": "123-456-7890" },

{ "_id": 2, "name": "Bob", "email": "bob@example.com", "phone": "234-567-8901" },

{ "_id": 3, "name": "Charlie", "email": "charlie@example.com", "phone": "345-678-9012" },

{ "_id": 4, "name": "David", "email": "david@example.com", "phone": "456-789-0123" }

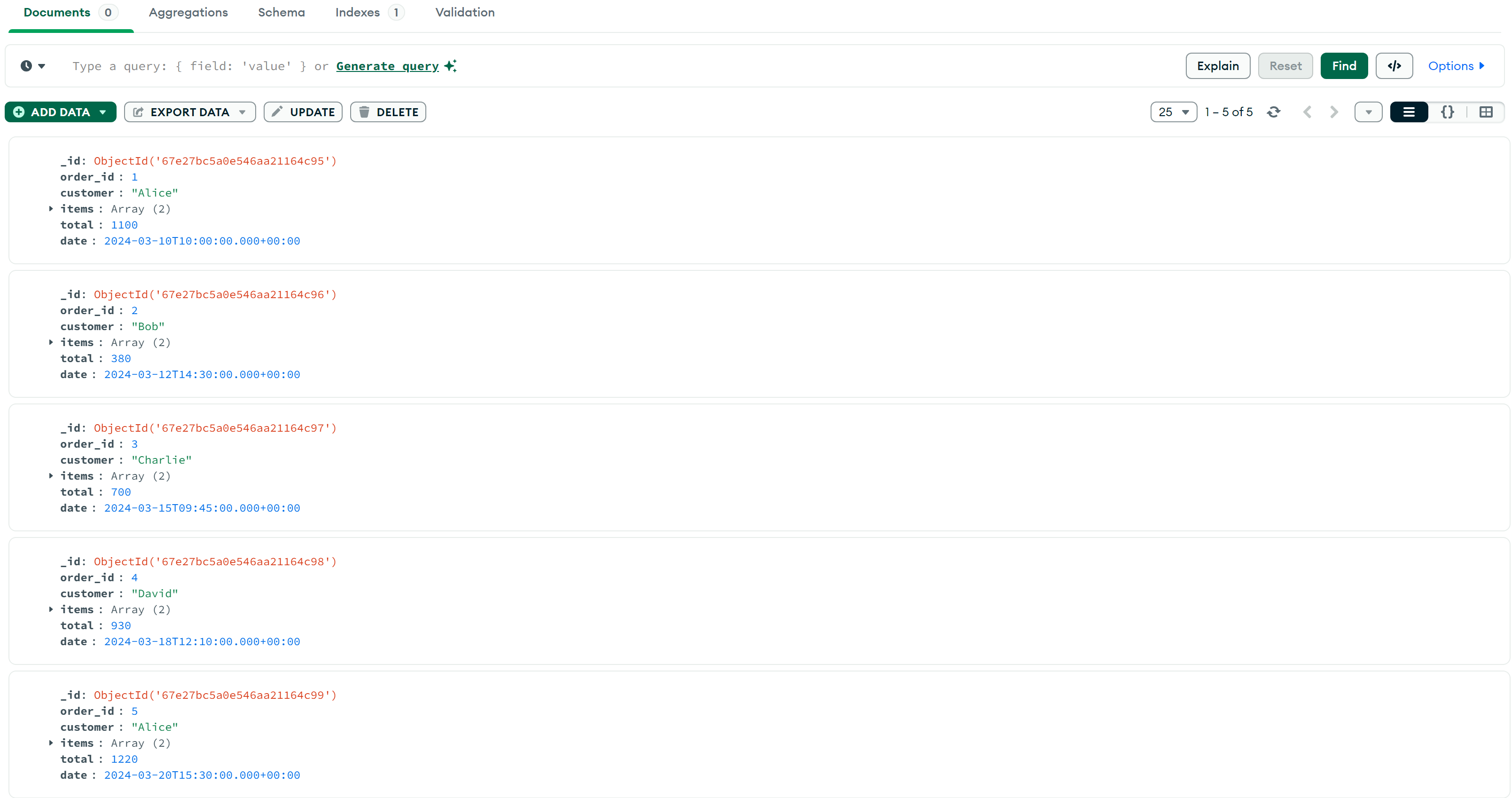

]);After inserting the data, view it using MongoDB Compass:

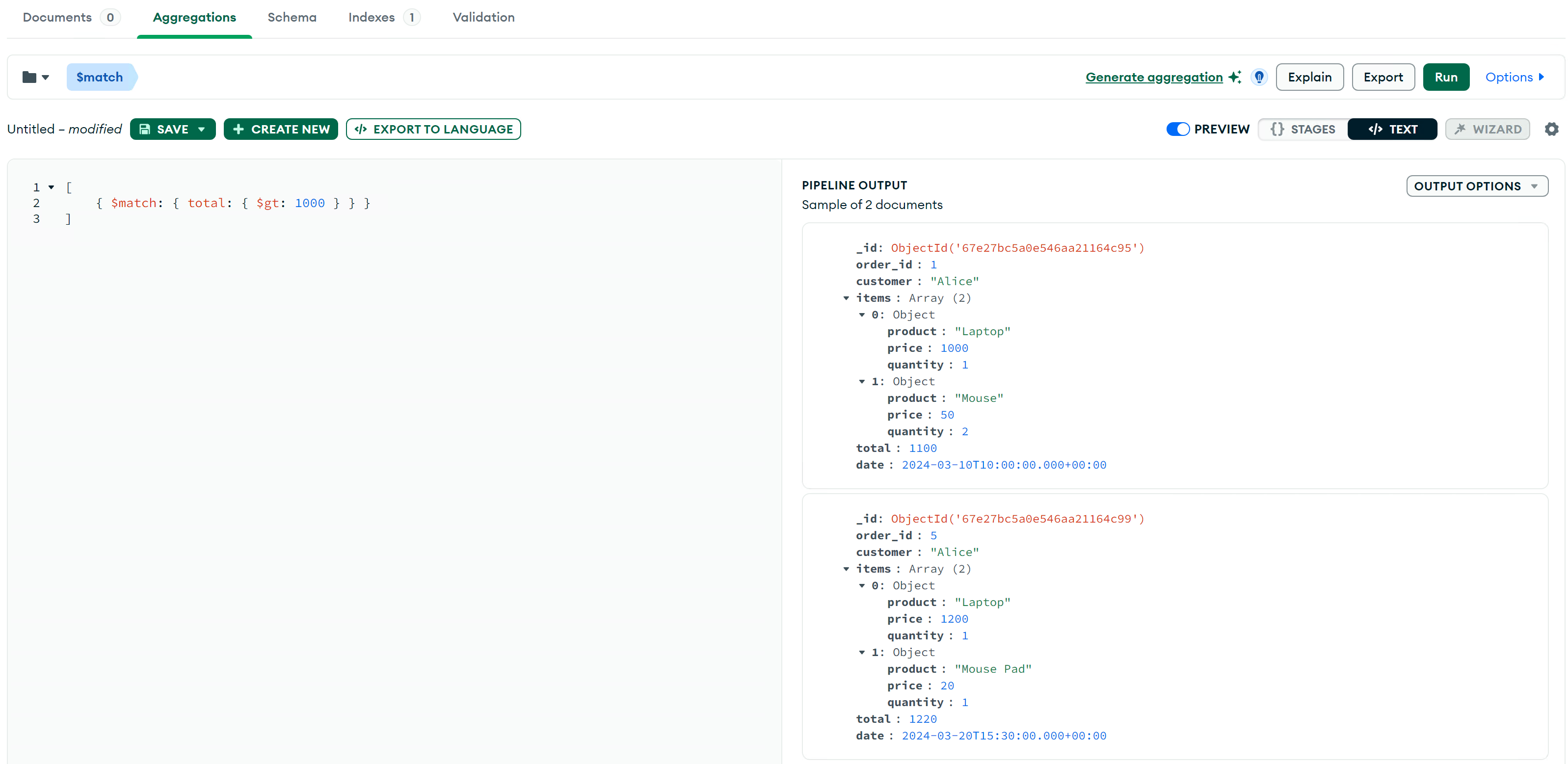

$match Filter Data

Retrieve orders where total is greater than 500:

db.orders.aggregate([

{ $match: { total: { $gt: 1000 } } }

]);The execution result in the Aggregations Tab of Compass (note that only the content within the parentheses should be used):

$group

Group by customer and calculate the total amount spent by each customer:

db.orders.aggregate([

{ $group: { _id: "$customer", total_spent: { $sum: "$total" } } }

]);Results:

{

"_id": "Bob",

"total_spent": 380

}

{

"_id": "Charlie",

"total_spent": 700

}

{

"_id": "Alice",

"total_spent": 2320

}

{

"_id": "David",

"total_spent": 930

}$sort

Sort by total amount spent per customer in descending order:

db.orders.aggregate([

{ $group: { _id: "$customer", total_spent: { $sum: "$total" } } },

{ $sort: { total_spent: -1 } }

]);Results:

{

"_id": "Alice",

"total_spent": 2320

}

{

"_id": "David",

"total_spent": 930

}

{

"_id": "Charlie",

"total_spent": 700

}

{

"_id": "Bob",

"total_spent": 380

}$project

Only display the order_id, customer, and total amount:

db.orders.aggregate([

{ $project: { _id: 0, order_id: 1, customer: 1, total: 1 } }

]);Results:

{

"order_id": 1,

"customer": "Alice",

"total": 1100

}

{

"order_id": 2,

"customer": "Bob",

"total": 380

}

{

"order_id": 3,

"customer": "Charlie",

"total": 700

}

{

"order_id": 4,

"customer": "David",

"total": 930

}

{

"order_id": 5,

"customer": "Alice",

"total": 1220

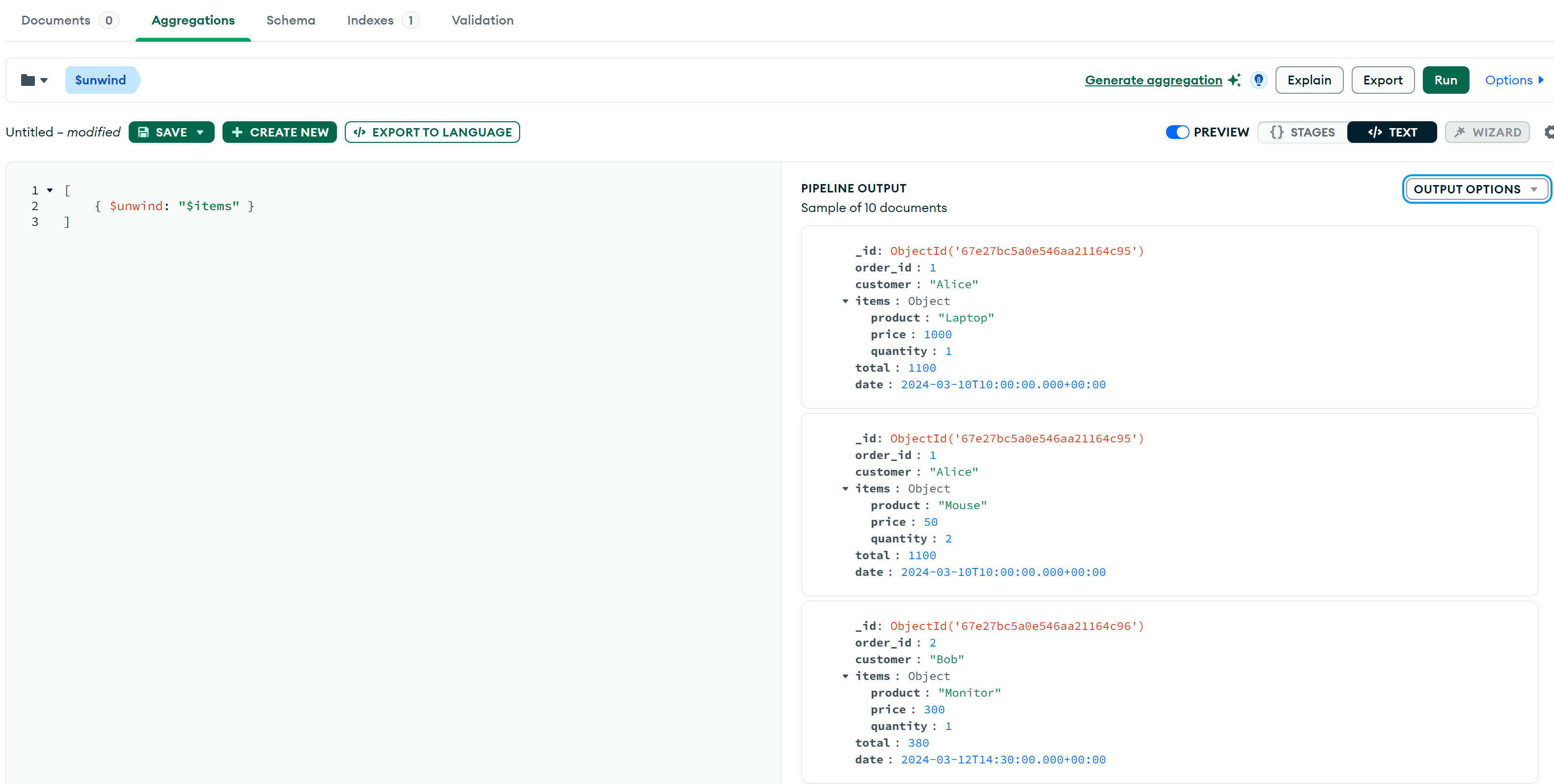

}$unwind

Unwind the items array so that each product in an order

becomes a separate document:

db.orders.aggregate([

{ $unwind: "$items" }

]);Partial results:

$lookup

The customers collection contains detailed information

about customers. We can use $lookup to perform a join-like

operation to associate the data:

db.orders.aggregate([

{

$lookup: {

from: "customers",

localField: "customer",

foreignField: "name",

as: "customer_info"

}

}

]);Partial result, the customer's detailed information is now in the

customer_info:

{

"_id": {

"$oid": "67e27bc5a0e546aa21164c95"

},

"order_id": 1,

"customer": "Alice",

"items": [

{

"product": "Laptop",

"price": 1000,

"quantity": 1

},

{

"product": "Mouse",

"price": 50,

"quantity": 2

}

],

"total": 1100,

"date": {

"$date": "2024-03-10T10:00:00.000Z"

},

"customer_info": [

{

"_id": 1,

"name": "Alice",

"email": "alice@example.com",

"phone": "123-456-7890"

}

]

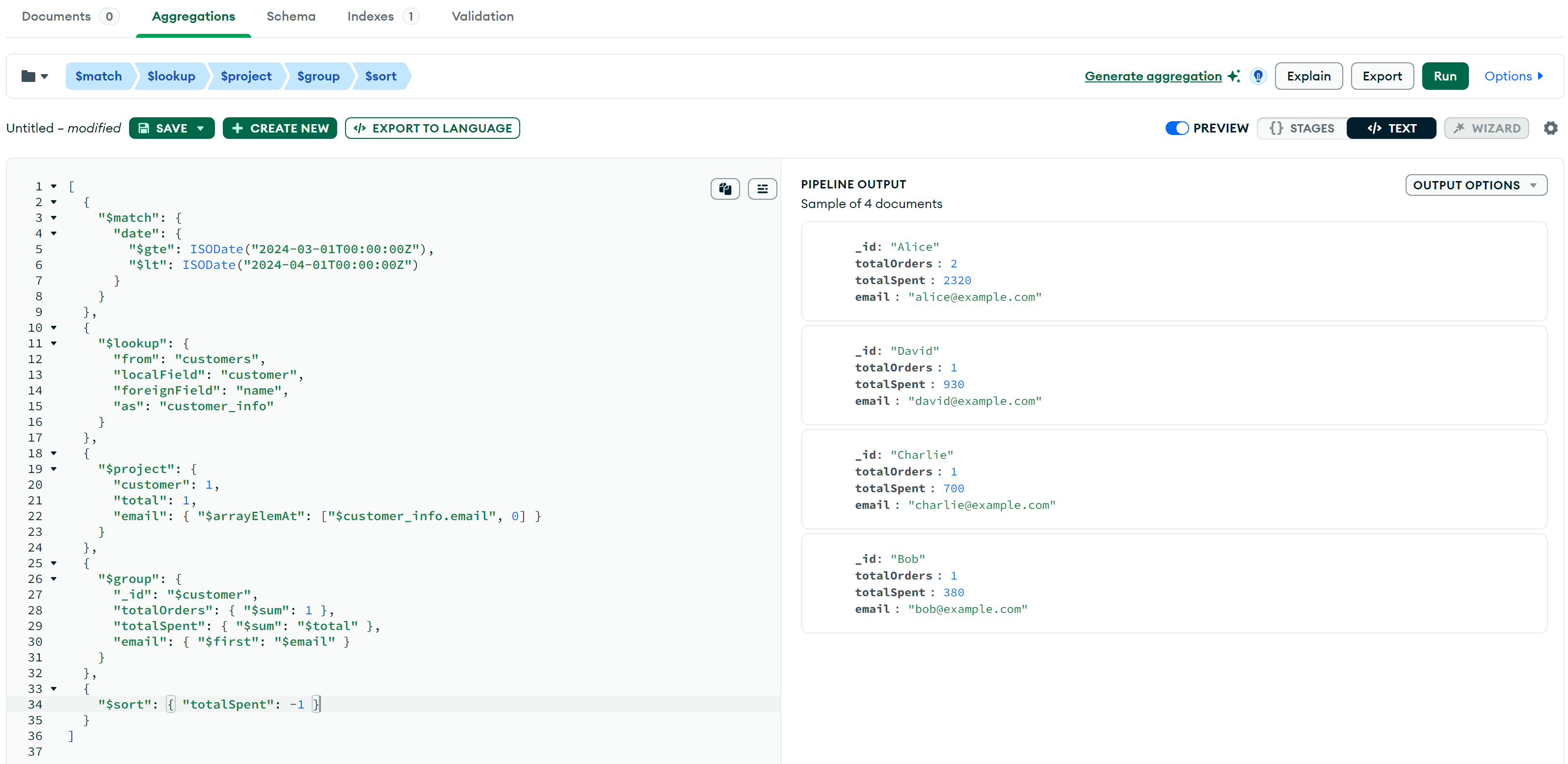

}Combine Together

We can combine multiple stages together to meet complex data analysis requirements. For example, to calculate the total number of orders and total spending for each customer in March 2024, while also retrieving the customer's email and sorting by total spending in descending order:

db.orders.aggregate([

{

"$match": {

"date": {

"$gte": ISODate("2024-03-01T00:00:00Z"),

"$lt": ISODate("2024-04-01T00:00:00Z")

}

}

},

{

"$lookup": {

"from": "customers",

"localField": "customer",

"foreignField": "name",

"as": "customer_info"

}

},

{

"$project": {

"customer": 1,

"total": 1,

"email": { "$arrayElemAt": ["$customer_info.email", 0] }

}

},

{

"$group": {

"_id": "$customer",

"totalOrders": { "$sum": 1 },

"totalSpent": { "$sum": "$total" },

"email": { "$first": "$email" }

}

},

{

"$sort": { "totalSpent": -1 }

}

]);Results:

Performance Analysis and Optimization

Performance Analysis

Methods to analyze the performance of the Aggregation Pipeline include:

explain()diagnostic: Usedb.collection.aggregate([...]).explain("executionStats")to analyze the query plan, check index usage, and examine the execution of each stage.- Profiler: Enable the MongoDB Profiler

(

db.setProfilingLevel(2)) to log slow queries and analyze thesystem.profilecollection. Check execution time. - MongoDB Atlas Performance Advisor (if using Atlas): Provides automatic optimization recommendations.

Performance Optimization

In general, the performance of Aggregation is influenced by the

following factors: - Index usage: The $match stage should

make use of indexes as much as possible to reduce the amount of data

scanned. - Stage order: Place $match first to reduce the

amount of data processed in subsequent stages. - Data volume: When

handling large datasets, the pipeline may consume a significant amount

of memory.

Index Optimization

Ensure that $match uses indexes to improve query

efficiency. For example:

db.orders.createIndex({ "status": 1, "createdAt": -1 })Reducing $lookup

Dependency

$lookup can cause performance degradation. Some

optimization strategies include:

- Preprocess data to avoid runtime

JOIN. - Use nested documents to store related data.

Pipeline Stage Order Optimization

Optimize the stages order:

$match- Filter data first to reduce the processing load for subsequent stages.$project- Remove unnecessary fields to reduce overhead.$sort- Use indexing for sorting when appropriate to avoid memory consumption.$group- Only aggregate when necessary.

MongoDB Aggregation Pipeline provides powerful data processing capabilities, suitable for data analysis, ETL, and offline tasks. By using indexes wisely, optimizing the Pipeline structure, and adopting sharding techniques, performance can be significantly improved.