MongoDB Cluster In Kubernetes(5): Enable UserDB TLS and Auth

This is Part 5. We will use the certificates generated in Part 4 to enable TLS and authentication (AUTH) on the user database.

MongoDB Ops Manager Series:

- Install MongoDB Ops Manager

- Create a UserDB ReplicaSet

- Expose UserDB to Public

- Openssl Generates Self-signed Certificates

- Enable UserDB TLS and Auth

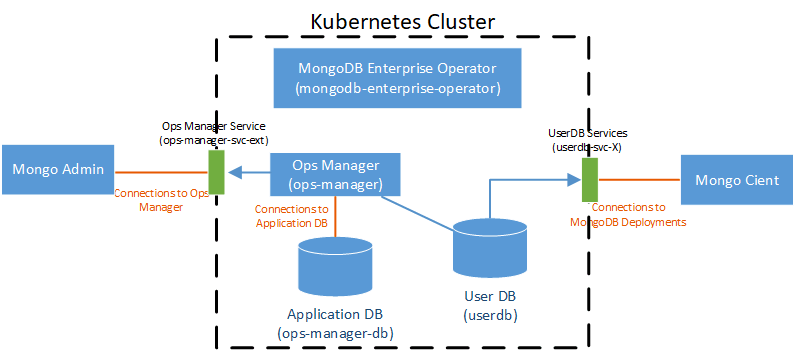

Understanding Different Secure Connections

Before we start, note that Ops Manager involves three different kinds of TLS connections:

- Secure Connections to Ops Manager

- Secure Connections to Application Database

- Secure Connections to MongoDB Deployments

A picture is worth a thousand words:

What we want is the last one: enabling TLS for MongoDB deployments. Unfortunately, this configuration cannot be done through the Ops Manager web UI either, because we need to "upload" a certificate to each server and the UI has no such capability.

If you read the Ops Manager documentation page Enable TLS for a Deployment, you will find:

Get and Install the TLS Certificate on Each MongoDB Host

Acquire a TLS certificate for each host serving a MongoDB process. This certificate must include the FQDN for the hostname of this MongoDB host. The FQDN can be the Common Name or the Subject Alternative Name of this host. You must install this TLS certificate on the MongoDB host.

Even after reading it, you probably still don't know how to install the certificate. What we actually need is the MongoDB Kubernetes Operator documentation: Secure Deployments using TLS.

By the way, when I first reached this step, I tried to copy the certificate into the StatefulSet pod with kubectl cp. However, once I ran kubectl exec into the pod, I realized that wasn't feasible:

```
groups: cannot find name for group ID 2000
I have no name!@mongo-1:/$ cat > ca.crt
bash: ca.crt: Permission denied
I have no name!@mongo-1:/$ whoami
whoami: cannot find name for user ID 2000
I have no name!@mongo-1:/$
```

The pod is really weird: you are logged in as user/group 2000, which has no name. I also considered mounting the certificate as a volume but never tried it, because I found the right way.

Another article for your reference: Secure MongoDB Enterprise on Red Hat OpenShift

Upload Certificates to Kubernetes Cluster

Create Server Secret

First, rename the four certificates like this:

| Original File | Save As |
|---|---|
| rootca.crt | ca-pem |
| userdbX.pem (X = 0, 1, 2) | userdb-X-pem |

Tip: end the PEM file names with -pem, not .pem. These files shouldn't have a file extension.
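The renaming can be scripted. A minimal sketch, assuming the Part 4 outputs are named rootca.crt, userdb0.pem, userdb1.pem and userdb2.pem and sit in the current directory; `prepare_cert_files` is a hypothetical helper, not part of any tool. It copies rather than moves, so the original files stay intact.

```shell
#!/bin/sh
# Hypothetical helper: copies the Part 4 certificate files to the names used in this article.
# Assumes rootca.crt and userdb<X>.pem (X = 0 .. members-1) are in the current directory.
prepare_cert_files() {
    members="${1:-3}"   # number of replica set members, 3 in this article

    cp rootca.crt ca-pem || return 1

    i=0
    while [ "$i" -lt "$members" ]; do
        # userdb0.pem -> userdb-0-pem, userdb1.pem -> userdb-1-pem, ...
        cp "userdb${i}.pem" "userdb-${i}-pem" || return 1
        i=$((i + 1))
    done
}
```

Run it as `prepare_cert_files 3` in the directory that holds the certificates.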

Each of the three server certificate files contains the certificate followed by its private key:

```
-----BEGIN CERTIFICATE-----
...
... your TLS certificate
...
-----END CERTIFICATE-----
-----BEGIN RSA PRIVATE KEY-----
...
... your private key
...
-----END RSA PRIVATE KEY-----
```

By comparison, rootca.crt contains only the certificate, without a private key:

```
-----BEGIN CERTIFICATE-----
...
... your TLS certificate
...
-----END CERTIFICATE-----
```

Create a secret to store the three server certificates:

```
$ kubectl create secret generic userdb-cert --from-file=userdb-0-pem --from-file=userdb-1-pem --from-file=userdb-2-pem -n mongodb
secret/userdb-cert created
```

Create CA ConfigMap

```
$ kubectl create configmap custom-ca --from-file=ca-pem -n mongodb
configmap/custom-ca created
```

Update User Database

replicaSetHorizons

Remember this from Part 3:

To solve the problem, we need to use spec.connectivity.replicaSetHorizons to specify the public addresses. However, to use this setting, spec.security.tls must be enabled first:

```yaml
security:
  tls:
    enabled: true
connectivity:
  replicaSetHorizons:
    - "userdb": "userdb0.com:27017"
    - "userdb": "userdb1.com:27017"
    - "userdb": "userdb2.com:27017"
```

The bad news is that enabling TLS is more complicated than I expected. Here we use self-signed certificates to encrypt transport-layer communication, and four certificates are required: one CA certificate and three server certificates.
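Since the rollout depends on all four files having the right shape, a quick local check of ca-pem and the userdb-X-pem files can catch a missing private key, or a private key that slipped into the CA file, before anything is applied. A minimal sketch using only grep; `check_server_pem` and `check_ca_pem` are hypothetical helpers that look for the PEM markers shown in the format examples above. They do not validate the certificates cryptographically.

```shell
#!/bin/sh
# Hypothetical helpers: they only look for PEM BEGIN markers with grep;
# they do not parse or verify the certificates themselves.

# A server file (userdb-X-pem) must hold a certificate AND its private key.
check_server_pem() {
    if ! grep -q -- '-----BEGIN CERTIFICATE-----' "$1"; then
        echo "$1: missing certificate block" >&2
        return 1
    fi
    # Matches both "BEGIN RSA PRIVATE KEY" and "BEGIN PRIVATE KEY".
    if ! grep -q -- '-----BEGIN .*PRIVATE KEY-----' "$1"; then
        echo "$1: missing private key block" >&2
        return 1
    fi
}

# The CA file (ca-pem) must hold a certificate and NO private key.
check_ca_pem() {
    if ! grep -q -- '-----BEGIN CERTIFICATE-----' "$1"; then
        echo "$1: missing certificate block" >&2
        return 1
    fi
    if grep -q -- 'PRIVATE KEY-----' "$1"; then
        echo "$1: CA file must not contain a private key" >&2
        return 1
    fi
}
```

For example: `check_ca_pem ca-pem && for i in 0 1 2; do check_server_pem "userdb-$i-pem"; done`.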

Now open userdb.yaml and add the security section:

```yaml
apiVersion: mongodb.com/v1
kind: MongoDB
metadata:
  name: userdb
  namespace: mongodb
spec:
  members: 3
  version: 4.2.2-ent
  type: ReplicaSet
  opsManager:
    configMapRef:
      name: ops-manager-connection
  credentials: ops-manager-admin-key
  security:
    tls:
      enabled: true
      ca: custom-ca
  connectivity:
    replicaSetHorizons:
      - "userdb": "userdb0.com:27017"
      - "userdb": "userdb1.com:27017"
      - "userdb": "userdb2.com:27017"
```

Tip: the key of replicaSetHorizons ("userdb") is not that important; just make sure every entry uses the same key string.
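If the replica set grows, the horizon entries can be generated so that every entry is guaranteed to share one key. A sketch, assuming the public names keep this article's userdb<X>.com:27017 pattern; `print_horizons` is a hypothetical helper whose output is meant to be pasted (indented) under spec.connectivity.

```shell
#!/bin/sh
# Hypothetical helper: prints the replicaSetHorizons list for N members,
# using the same horizon key for every entry (the key name itself is arbitrary).
print_horizons() {
    members="$1"
    key="${2:-userdb}"     # horizon key, identical for all entries
    port="${3:-27017}"

    echo "replicaSetHorizons:"
    i=0
    while [ "$i" -lt "$members" ]; do
        printf '  - "%s": "userdb%s.com:%s"\n' "$key" "$i" "$port"
        i=$((i + 1))
    done
}
```

`print_horizons 3` reproduces the three entries used in userdb.yaml.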

Apply userdb.yaml:

```
$ kubectl apply -f userdb.yaml -n mongodb
mongodb.mongodb.com/userdb configured
```

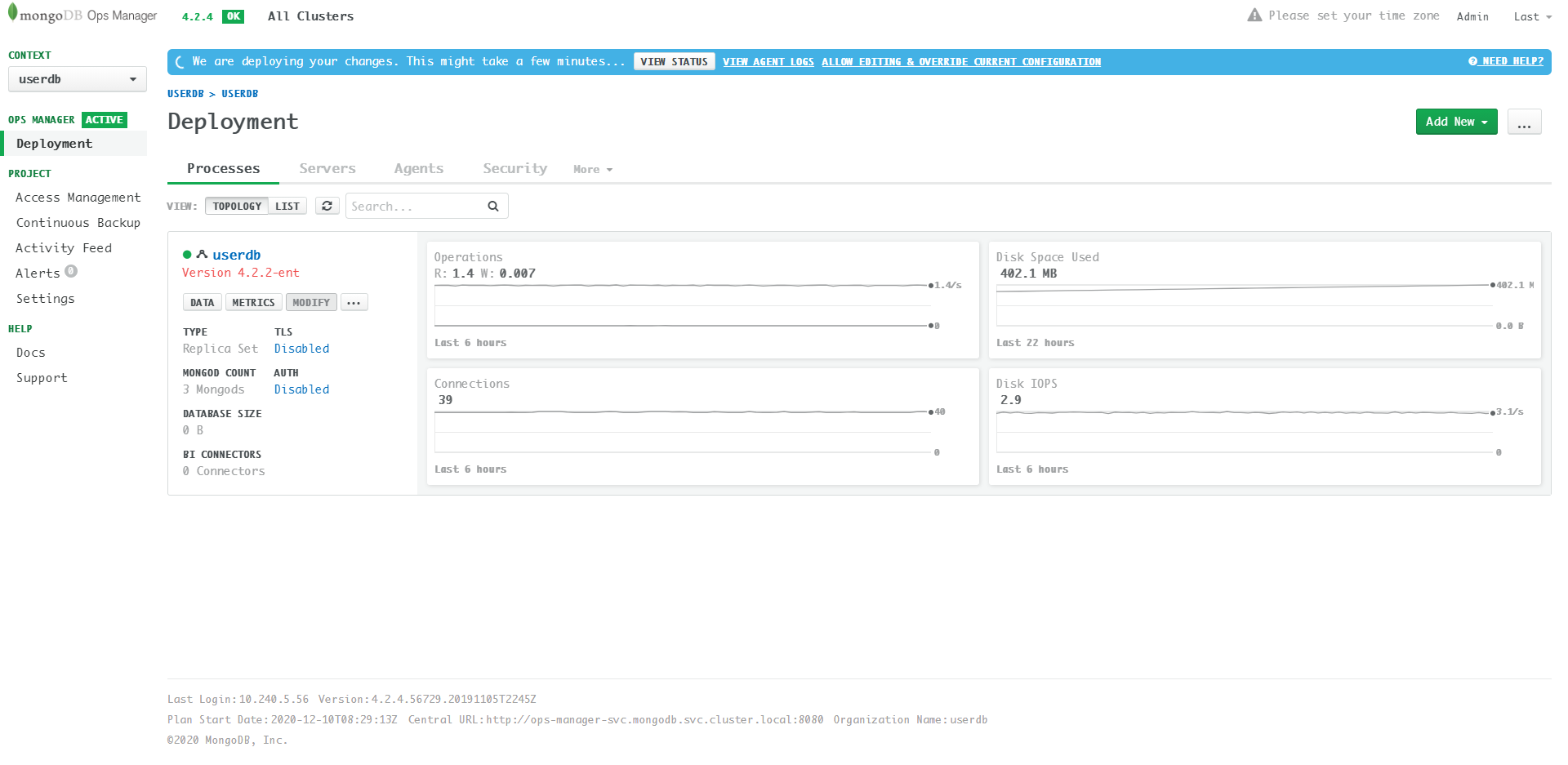

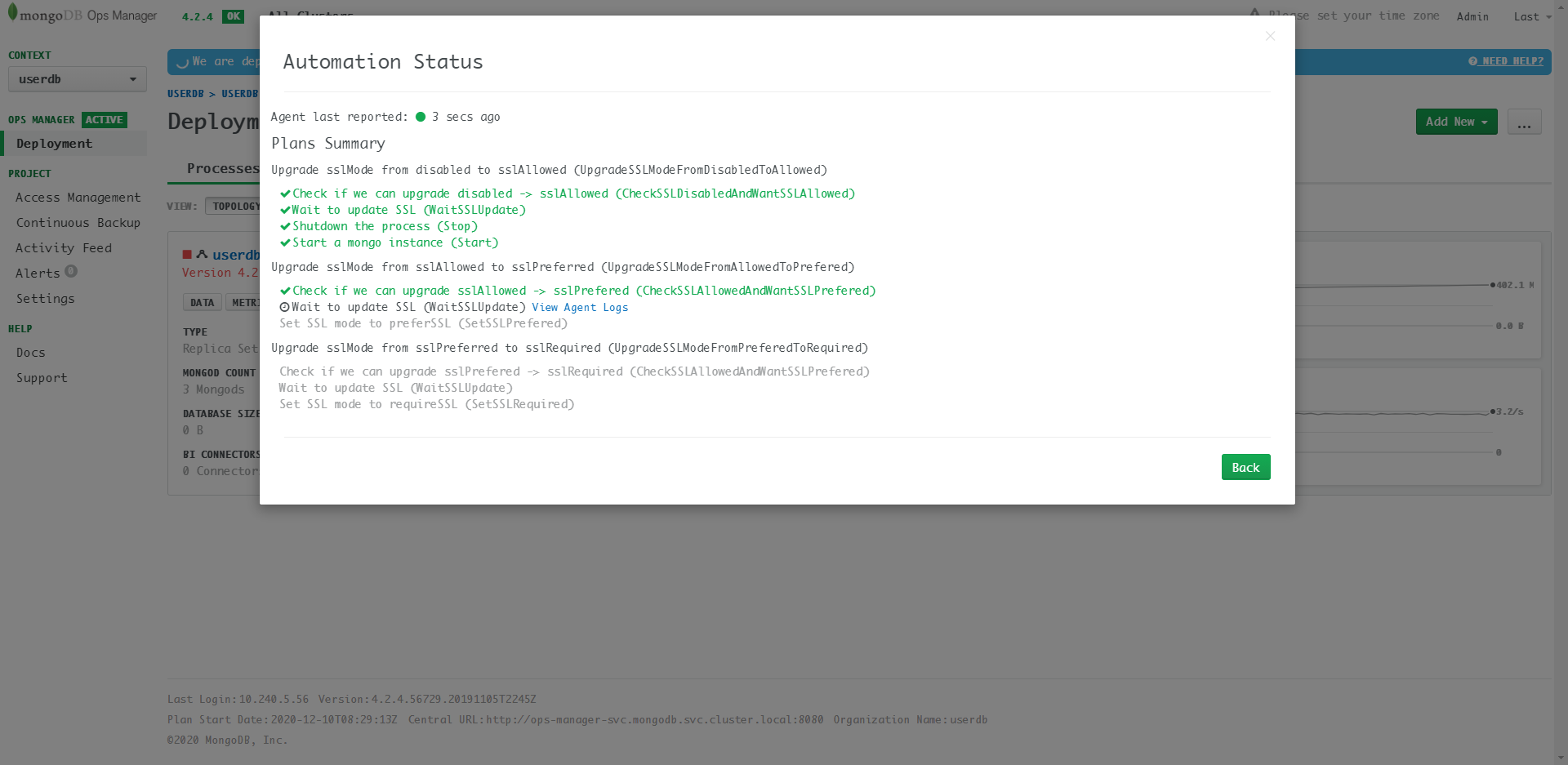

Updating details:

The update has to go through these stages: disabled -> sslAllowed -> sslPreferred -> sslRequired.

The update takes a long time (about 15 minutes) and the pods restart many times. When the update succeeds, the TLS option in the Processes UI of the userdb deployment becomes Enabled.
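Instead of refreshing the UI for 15 minutes, you can also poll the resource from the command line. A minimal sketch, assuming the operator reports its progress in the MongoDB resource's status.phase field (Running once reconciliation has finished); `wait_for_phase` is a hypothetical helper, and the KUBECTL variable exists only so the function can be dry-run with a fake command.

```shell
#!/bin/sh
# Hypothetical helper: polls the MongoDB custom resource "userdb" until
# status.phase equals the wanted value, or gives up after max_tries attempts.
# Assumption: the operator reports its progress in .status.phase.
wait_for_phase() {
    want="${1:-Running}"
    max_tries="${2:-90}"      # 90 tries x 20 s = 30 minutes
    interval="${3:-20}"       # seconds between polls
    kubectl_cmd="${KUBECTL:-kubectl}"

    i=0
    while [ "$i" -lt "$max_tries" ]; do
        phase=$($kubectl_cmd get mongodb userdb -n mongodb -o jsonpath='{.status.phase}')
        echo "phase: ${phase:-<none>}"
        if [ "$phase" = "$want" ]; then
            return 0
        fi
        i=$((i + 1))
        sleep "$interval"
    done
    echo "gave up waiting for phase $want" >&2
    return 1
}
```

Right after kubectl apply the phase may still show the previous Running value for a moment, so start polling once it has left Running, or just watch the printed phases.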

If you kubectl exec into a pod, you can see that the certificates are linked under /mongodb-automation/ (as symlinks into /var/lib/mongodb-automation/secrets/):

```
I have no name!@userdb-0:/$ ls -l /mongodb-automation/
total 8
lrwxrwxrwx 1 2000 root 45 Dec 11 06:25 ca.pem -> /var/lib/mongodb-automation/secrets/ca/ca-pem
drwxr-xr-x 2 2000 root 4096 Dec 11 06:25 files
-rw-r--r-- 1 2000 root 3 Dec 11 06:25 mongodb-mms-automation-agent.pid
lrwxrwxrwx 1 2000 root 54 Dec 11 06:25 server.pem -> /var/lib/mongodb-automation/secrets/certs/userdb-0-pem
```

Error calling ComputeState, Error dialing to connParams, Error checking if rs member is up

If the update fails and the Agent Log shows errors like these:

```
<userdb-1> [03:29:59.660] Failed to compute states : <userdb-1> [03:29:59.660] Error calling ComputeState : <userdb-1> [03:29:59.660] Error getting fickle state for current state : <userdb-1> [03:29:59.660] Error checking if rs member = userdb-0.userdb-svc.mongodb.svc.cluster.local:27017 is up : <userdb-1> [03:29:59.660] Error executing WithClientFor() for cp=userdb-0.userdb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : <userdb-1> [03:29:59.660] Error checking out client (0x0) for connParam=userdb-0.userdb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [03:29:59.659] Error dialing to connParams=userdb-0.userdb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 4 identities, but none of them worked. They were (__system@local[[MONGODB-CR/SCRAM-SHA-1 SCRAM-SHA-256]][1024], __system@local[[MONGODB-CR/SCRAM-SHA-1 SCRAM-SHA-256]][1024], mms-automation@admin[[MONGODB-CR/SCRAM-SHA-1]][24], )
Error checking out client (0x0) for connParam=userdb-0.userdb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [03:32:57.470] Error dialing to connParams=userdb-0.userdb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 4 identities, but none of them worked. They were (__system@local[[MONGODB-CR/SCRAM-SHA-1 SCRAM-SHA-256]][1024], __system@local[[MONGODB-CR/SCRAM-SHA-1 SCRAM-SHA-256]][1024], mms-automation@admin[[MONGODB-CR/SCRAM-SHA-1]][24], )
Error getting client ready for conn params = userdb-0.userdb-svc.mongodb.svc.cluster.local:27017 (local=false). Informing all requests and disposing of client (0x0). requests=[ 0xc001e58780 ] : [03:32:57.470] Error dialing to connParams=userdb-0.userdb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 4 identities, but none of them worked. They were (__system@local[[MONGODB-CR/SCRAM-SHA-1 SCRAM-SHA-256]][1024], __system@local[[MONGODB-CR/SCRAM-SHA-1 SCRAM-SHA-256]][1024], mms-automation@admin[[MONGODB-CR/SCRAM-SHA-1]][24], )
```

It's highly likely that the certificate is wrong, even though the error messages say nothing about certificates. Double-check that each certificate contains its internal endpoint, e.g. userdb-0.userdb-svc.mongodb.svc.cluster.local. If it doesn't, go back to the previous step, Openssl Generates Self-signed Certificates, modify [ alt_names ] in userdb<X>.cnf and recreate the server certificates.
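Each server certificate needs the internal name that the agents and replica set members dial, and, for clients connecting through the horizons, the public name they use. A sketch that prints an [ alt_names ] section for one member, assuming the naming used throughout this series (StatefulSet userdb, headless service userdb-svc, namespace mongodb, public names userdb<X>.com); `print_alt_names` is a hypothetical helper, and the DNS.<n> layout is the common OpenSSL config convention, which may not match your userdb<X>.cnf exactly.

```shell
#!/bin/sh
# Hypothetical helper: prints an OpenSSL [ alt_names ] section for member $1.
# Names assume this series' layout: StatefulSet "userdb", headless service
# "userdb-svc", namespace "mongodb", public horizon names userdb<X>.com.
print_alt_names() {
    i="$1"
    echo "[ alt_names ]"
    # Internal endpoint the automation agents and replica set members dial
    echo "DNS.1 = userdb-${i}.userdb-svc.mongodb.svc.cluster.local"
    # Public name used by clients through replicaSetHorizons
    echo "DNS.2 = userdb${i}.com"
}
```

To check a certificate you already have, `openssl x509 -in userdb0.pem -noout -text` lists its Subject Alternative Name entries.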

Connect to UserDB

Now connect to the user database with the mongo shell. One catch: tlsCAFile is not supported in the connection string, so you must specify it as a command-line option:

$ mongo mongodb://userdb0.com:27017,userdb1.com:27017,userdb2.com:27017/ --tls --tlsCAFile rootca.crt

MongoDB shell version v4.4.2

connecting to: mongodb://userdb0.com:27017,userdb1.com:27017,userdb2.com:27017/?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("fcbaa78a-21dd-472f-a84f-bf3435bf9088") }

MongoDB server version: 4.2.2

WARNING: shell and server versions do not match

---

The server generated these startup warnings when booting:

2020-12-11T06:25:58.940+0000 I STORAGE [initandlisten]

2020-12-11T06:25:58.940+0000 I STORAGE [initandlisten] ** WARNING: Using the XFS filesystem is strongly recommended with the WiredTiger storage engine

2020-12-11T06:25:58.940+0000 I STORAGE [initandlisten] ** See http://dochub.mongodb.org/core/prodnotes-filesystem

2020-12-11T06:26:00.201+0000 I CONTROL [initandlisten]

2020-12-11T06:26:00.201+0000 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2020-12-11T06:26:00.201+0000 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2020-12-11T06:26:00.201+0000 I CONTROL [initandlisten]

---

MongoDB Enterprise userdb:PRIMARY>PRIMARY indicates

that the cluster works fine! Run rs.conf() and check the

horizons: MongoDB Enterprise userdb:PRIMARY> rs.conf()

{

"_id" : "userdb",

"version" : 2,

"protocolVersion" : NumberLong(1),

"writeConcernMajorityJournalDefault" : true,

"members" : [

{

"_id" : 0,

"host" : "userdb-0.userdb-svc.mongodb.svc.cluster.local:27017",

...

"horizons" : {

"userdb" : "userdb0.com:27017"

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 1,

"host" : "userdb-1.userdb-svc.mongodb.svc.cluster.local:27017",

...

"horizons" : {

"userdb" : "userdb1.com:27017"

},

"slaveDelay" : NumberLong(0),

"votes" : 1

},

{

"_id" : 2,

"host" : "userdb-2.userdb-svc.mongodb.svc.cluster.local:27017",

...

"horizons" : {

"userdb" : "userdb2.com:27017"

},

"slaveDelay" : NumberLong(0),

"votes" : 1

}

],

...

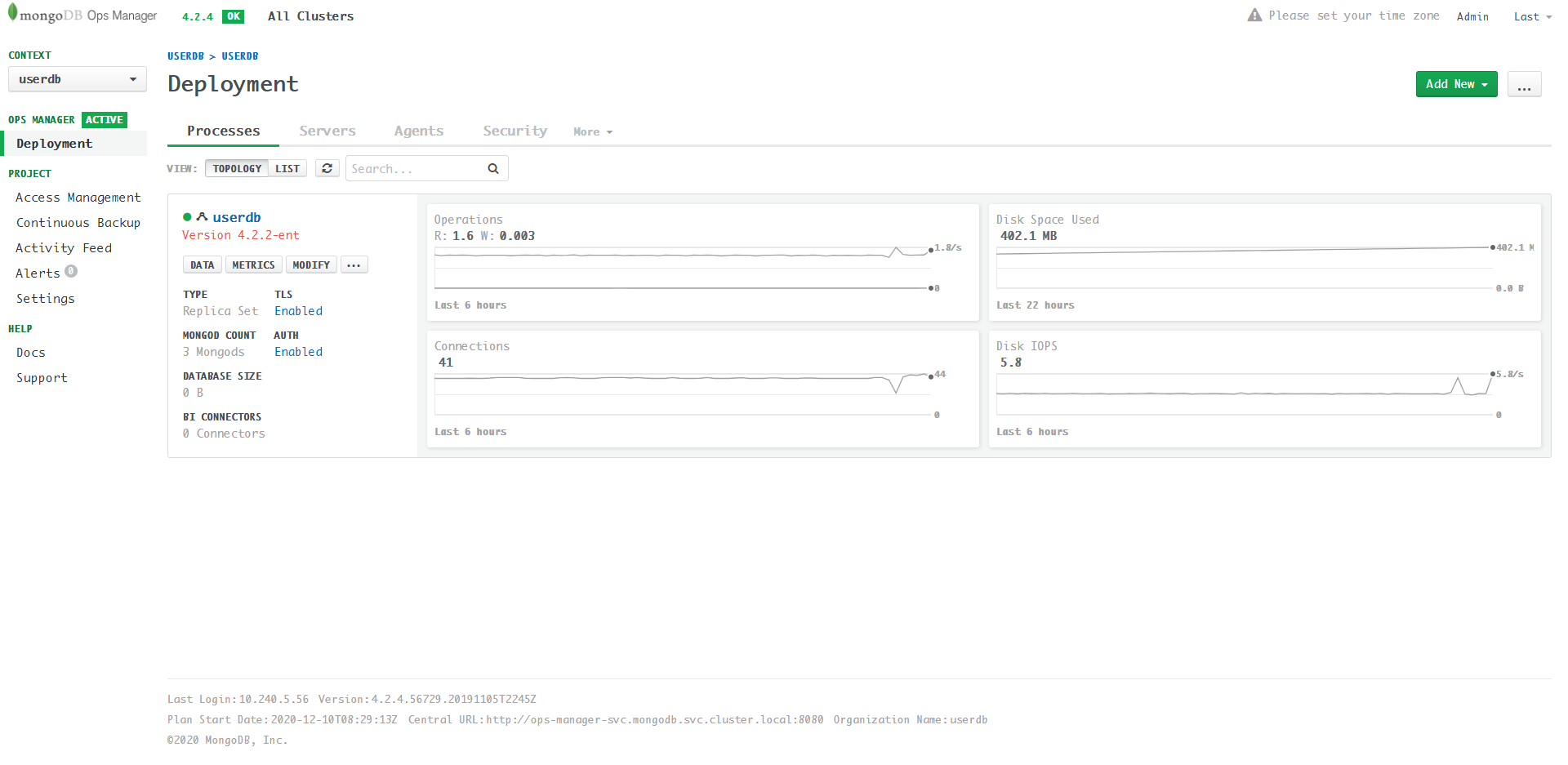

}Enable AUTH

Enable AUTH after TLS is pretty easy. Just click the

Disabled in the Processes UI and follow the guide. Finally,

click the top review & deploy button.

The final Processes UI:

The startup warnings disappear:

```
$ mongo mongodb://userdb0.com:27017,userdb1.com:27017,userdb2.com:27017/ --tls --tlsCAFile rootca.crt
MongoDB shell version v4.4.2
connecting to: mongodb://userdb0.com:27017,userdb1.com:27017,userdb2.com:27017/?compressors=disabled&gssapiServiceName=mongodb
Implicit session: session { "id" : UUID("5063023a-b76d-4d03-923f-18332e2e7cc9") }
MongoDB server version: 4.2.2
WARNING: shell and server versions do not match
MongoDB Enterprise userdb:PRIMARY>
```

Summary

This series describes how to deploy MongoDB Ops Manager and how to use the MongoDB Kubernetes Operator to build a MongoDB ReplicaSet. If you find any errors, please feel free to leave a comment below.

Thanks for your time!