Deploying a MongoDB Sharded Cluster on Kubernetes

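A sharded cluster is the most complex way to deploy MongoDB, because it has more components and more deployment steps. In practice it also runs into several certificate and TLS problems that do not occur when deploying a ReplicaSet. Before reading this article, it is strongly recommended to read Create the user database (replicaset) first as a foundation.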

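A sharded cluster consists of three parts:

- shard server: stores a portion of the data; each shard can be a replica set.

- mongos: the query router, which can be thought of as the front end of the whole cluster; clients interact with the cluster through mongos.

- config server: stores the cluster's metadata.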

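This article walks through the deployment using a sharded cluster with 2 shards (each a 3-member ReplicaSet), 2 mongos instances and 3 config servers. It can be read as a bonus installment of the series on deploying MongoDB clusters on Kubernetes.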

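The full series:

- Install MongoDB Ops Manager

- Create the user database (replicaset)

- Configure public network access to the user database service

- Generate a self-signed CA certificate and server certificates with openssl

- Enable TLS encryption and Auth for the user database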

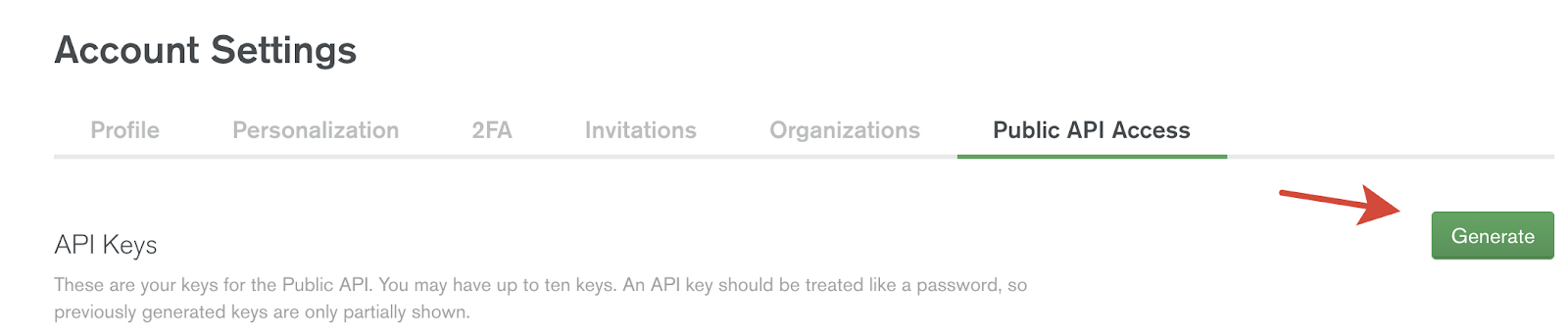

Create an API Key

If ops-manager-admin-secret already exists, this step can be skipped.

The user database needs permission to access Ops Manager, so an API key has to be generated.

Go to "UserName -> Account -> Public API Access": click the user name in the top-right corner of the page, and the pop-up menu contains "Account".

Note that this <apikey> is shown only once, so keep it somewhere safe.

Create a secret to store the API key

The user name used when creating the API Key Secret must match the Account user name opened above; here it is the user name stored in ops-manager-admin-secret.

$ kubectl create secret generic om-user-credentials --from-literal="user=xxx@xxx.com" --from-literal="publicApiKey=<apikey>" -n mongodb

secret/om-user-credentials created

Create the Sharded Cluster

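Creating a sharded cluster is more involved and takes three steps, because the plan generated for switching a sharded cluster to TLS appears to have a bug: if the cluster is deployed the same way as a ReplicaSet, enabling TLS fails and the cluster deadlocks with no way to recover. See the last section, "Common errors and fixes", for details.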

The process consists of the following three steps:

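- Set mongodsPerShardCount to 1, so that each shard is a single-member ReplicaSet.

- Configure the certificates and enable TLS by adding the security section.

- Set mongodsPerShardCount to 3, turning each shard into a 3-member ReplicaSet.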
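The three steps can be driven from one script that applies each manifest only after the previous one has settled. A minimal sketch, assuming the three manifests sit in the current directory and that the MongoDB resource reports its state in `.status.phase` with the value `Running` once reconciliation has finished (an assumption about the operator, not something stated in this article); `wait_running` and `rollout` are hypothetical helper names:

```shell
#!/usr/bin/env bash
# Sketch: apply the three manifests in order, waiting in between.
# Assumption: the MongoDB resource exposes .status.phase == "Running"
# when the operator has finished reconciling.
set -euo pipefail

NS=mongodb
NAME=sharddb

# Poll .status.phase until it reads "Running" or the attempts run out.
wait_running() {
  local tries=${1:-60} delay=${2:-10} phase="" i
  for ((i = 0; i < tries; i++)); do
    phase=$(kubectl get mdb "$NAME" -n "$NS" -o jsonpath='{.status.phase}' 2>/dev/null || true)
    if [[ $phase == Running ]]; then
      return 0
    fi
    sleep "$delay"
  done
  echo "timed out waiting for $NAME, last phase: ${phase:-unknown}" >&2
  return 1
}

# Apply step 1, 2 and 3, each only after the previous one is Running.
rollout() {
  local step
  for step in sharddb-step1.yaml sharddb-step2.yaml sharddb-step3.yaml; do
    kubectl apply -f "$step"
    # Give the operator time to start reconciling; otherwise the
    # "Running" phase left over from the previous step could be read.
    sleep "${SETTLE:-30}"
    wait_running
  done
}
```

Source the script and call `rollout`. The fixed `SETTLE` delay is a crude guard, so checking the servers view in Ops Manager is still worthwhile before moving on.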

Initial deployment

The configuration file for step 1, sharddb-step1.yaml, is as follows:

apiVersion: mongodb.com/v1
kind: MongoDB
metadata:
  name: sharddb
  namespace: mongodb
spec:
  shardCount: 2
  mongodsPerShardCount: 1
  mongosCount: 2
  configServerCount: 3
  version: 4.2.2-ent
  type: ShardedCluster
  opsManager:
    configMapRef:
      name: ops-manager-connection
  credentials: om-user-credentials
  exposedExternally: false
  shardPodSpec:
    cpu: "1"
    memory: 1Gi
    persistence:
      single:
        storage: 5Gi
  configSrvPodSpec:
    cpu: "1"
    memory: 1Gi
    persistence:
      single:
        storage: 5Gi
  mongosPodSpec:
    cpu: "1"
    memory: 1Gi

Notes:

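- Do not add the security field here. If TLS is enabled at creation time, the servers cannot communicate with each other once the deployment completes, and no Primary/Secondary can be elected. The cluster must be created with TLS disabled, and TLS is turned on only after the certificates are in place.

- exposedExternally: false is set because the default way of exposing the service is NodePort, and with NodePort the external address of the service may change when the service is updated. So the built-in exposure is skipped entirely and a Service is written by hand instead.

- shardPodSpec and configSrvPodSpec can set cpu, memory and the size of the persistent volume; mongosPodSpec can only set cpu and memory. In addition, cpuRequests and memoryRequests can be set at the same level to specify the minimum requested values; if they are omitted, they default to the same values as the limits above. See [MongoDB Database Resource Specification](https://docs.mongodb.com/kubernetes-operator/master/reference/k8s-operator-specification/)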

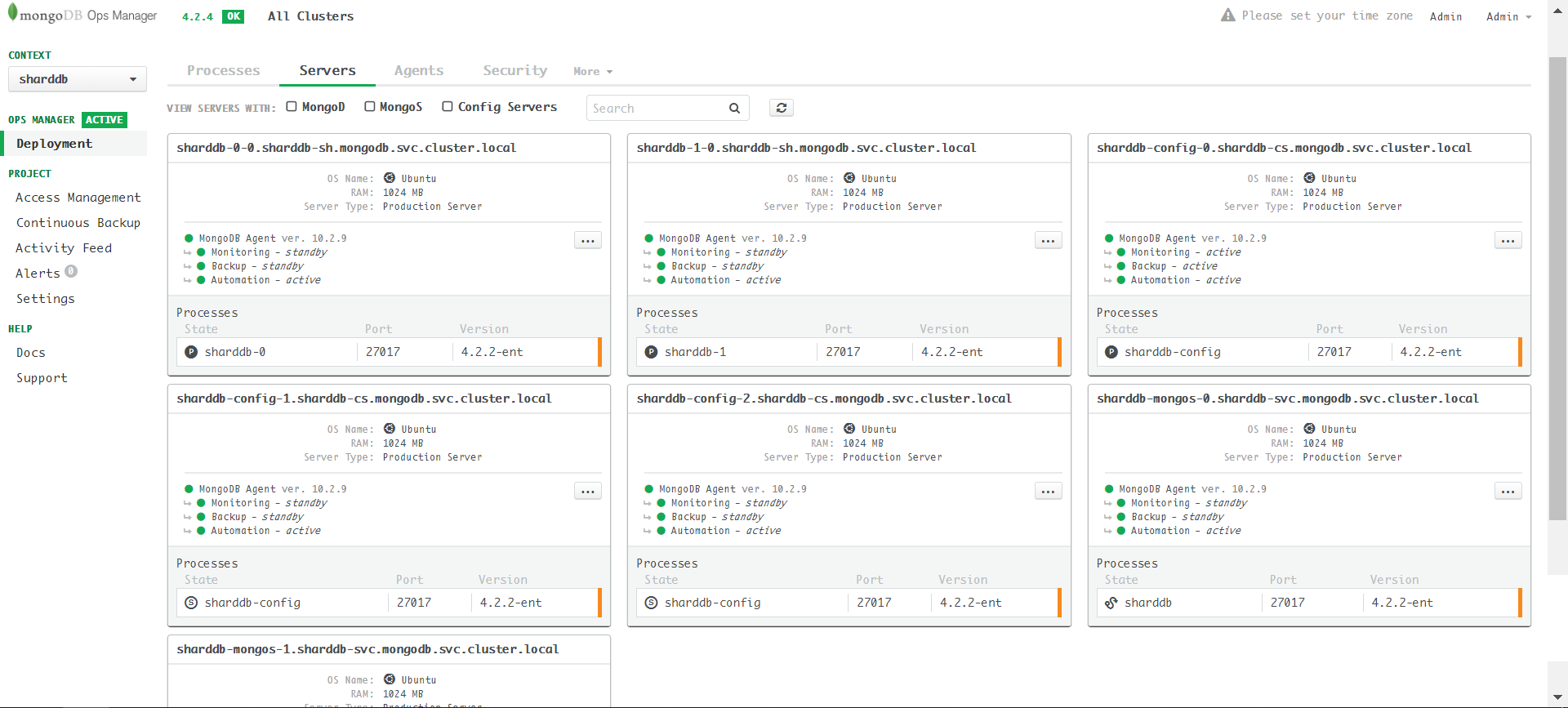

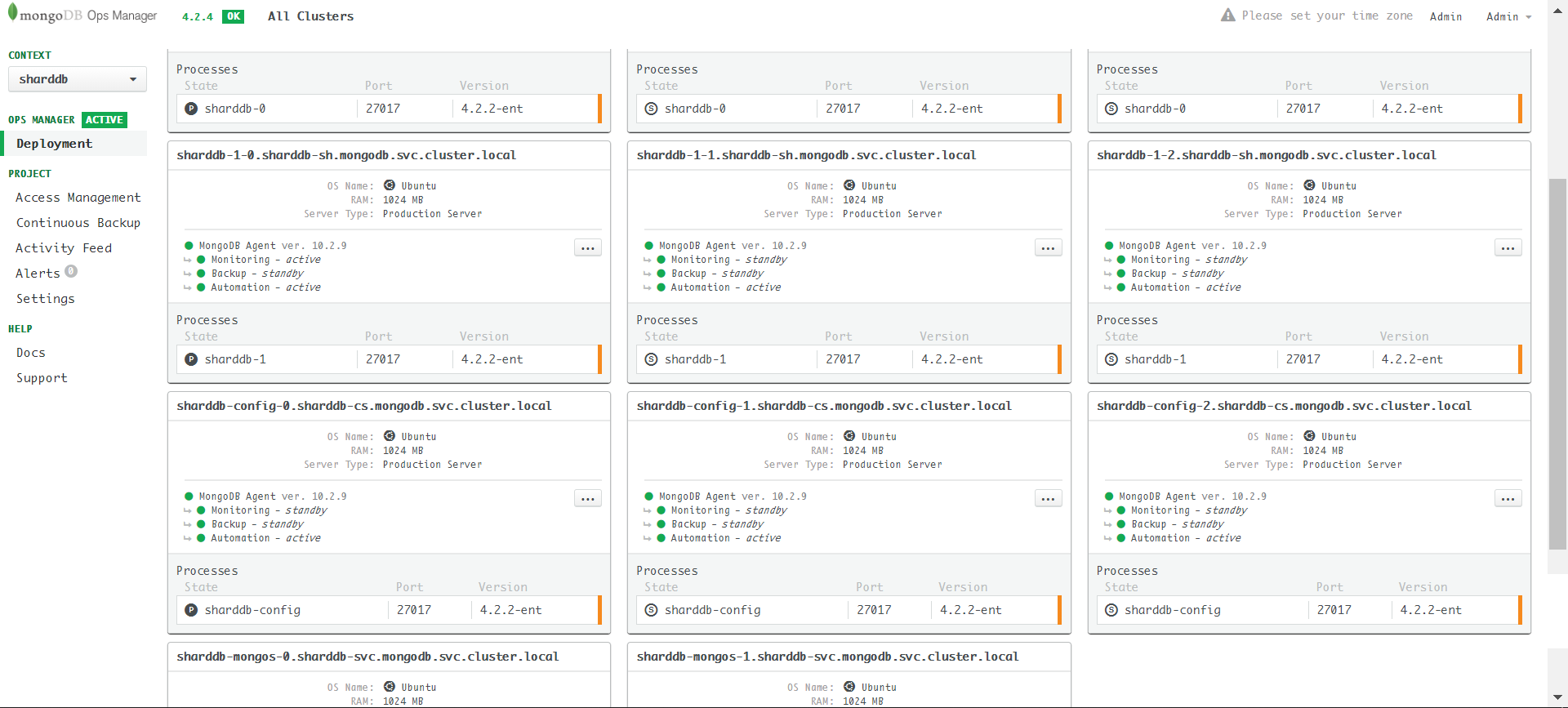

部署完毕之后在Ops Manager中的效果如下,共7个server:

Expose the mongos services

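mongos is exposed the same way as a replica set: every mongos must be exposed to the public network so that the client chooses which mongos instance to connect to. This matters even more for transactions, because all operations in a transaction must be executed on the same mongos. See: Don't put a load balancer in front of mongos.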
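Because each mongos gets its own Service and the manifests in sharddbmongosservice.yaml differ only in the index, they can also be generated for any mongosCount. A sketch assuming the pod naming `<name>-mongos-<i>` and the same labels and selector as sharddbmongosservice.yaml; `mongos_services` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print one LoadBalancer Service per mongos pod, separated by "---".
# Assumption: mongos pods are named <name>-mongos-<i>, as in this article.
set -euo pipefail

mongos_services() {
  local name=${1:-sharddb} count=${2:-2} ns=${3:-mongodb} i
  for ((i = 0; i < count; i++)); do
    if ((i > 0)); then
      echo '---'
    fi
    cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: mongos-svc-$i
  namespace: $ns
  labels:
    app: $name-svc
    controller: mongodb-enterprise-operator
spec:
  type: LoadBalancer
  ports:
  - protocol: TCP
    port: 27017
    targetPort: 27017
  selector:
    app: $name-svc
    controller: mongodb-enterprise-operator
    statefulset.kubernetes.io/pod-name: $name-mongos-$i
EOF
  done
}
```

Usage: `mongos_services sharddb 2 | kubectl apply -f -`. Selecting on `statefulset.kubernetes.io/pod-name` is what pins each Service, and therefore each public address, to exactly one mongos.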

Apply sharddbmongosservice.yaml directly:

apiVersion: v1
kind: Service
metadata:
  name: mongos-svc-0
  namespace: mongodb
  labels:
    app: sharddb-svc
    controller: mongodb-enterprise-operator
spec:
  type: LoadBalancer
  ports:
  - protocol: TCP
    port: 27017
    targetPort: 27017
  selector:
    app: sharddb-svc
    controller: mongodb-enterprise-operator
    statefulset.kubernetes.io/pod-name: sharddb-mongos-0
---
apiVersion: v1
kind: Service
metadata:
  name: mongos-svc-1
  namespace: mongodb
  labels:
    app: sharddb-svc
    controller: mongodb-enterprise-operator
spec:
  type: LoadBalancer
  ports:
  - protocol: TCP
    port: 27017
    targetPort: 27017
  selector:
    app: sharddb-svc
    controller: mongodb-enterprise-operator
    statefulset.kubernetes.io/pod-name: sharddb-mongos-1

Generate the server certificates

For generating the CA certificate, see: Generate a self-signed CA certificate and server certificates with openssl

Configure server.cnf

From the layout above, there are 2*3=6 shard servers, 2 mongos servers and 3 config servers, 11 servers in total.
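The in-cluster names follow a fixed pattern, so the list can be enumerated instead of typed by hand. A sketch assuming the headless Service names seen in this article (`<name>-sh`, `<name>-cs`, `<name>-svc`) and the default `cluster.local` cluster domain; `sharded_hosts` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Enumerate the in-cluster DNS names of every pod in the sharded cluster:
#   shards: <name>-<Y>-<X>.<name>-sh.<ns>.svc.cluster.local
#   config: <name>-config-<X>.<name>-cs.<ns>.svc.cluster.local
#   mongos: <name>-mongos-<X>.<name>-svc.<ns>.svc.cluster.local
set -euo pipefail

# Args: name namespace shardCount mongodsPerShardCount configServerCount mongosCount
sharded_hosts() {
  local name=$1 ns=$2 shards=$3 per_shard=$4 configs=$5 mongos=$6 y x
  local domain="$ns.svc.cluster.local"
  for ((y = 0; y < shards; y++)); do
    for ((x = 0; x < per_shard; x++)); do
      echo "$name-$y-$x.$name-sh.$domain"
    done
  done
  for ((x = 0; x < configs; x++)); do
    echo "$name-config-$x.$name-cs.$domain"
  done
  for ((x = 0; x < mongos; x++)); do
    echo "$name-mongos-$x.$name-svc.$domain"
  done
}
```

With this article's layout, `sharded_hosts sharddb mongodb 2 3 3 2` prints 6 + 3 + 2 = 11 names, the same internal addresses that go into `[ alt_names ]`.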

The [ alt_names ] section of server.cnf must list the addresses of all servers:

sharddb-mongos0.com

sharddb-mongos1.com

sharddb-0-0.sharddb-sh.mongodb.svc.cluster.local

sharddb-0-1.sharddb-sh.mongodb.svc.cluster.local

sharddb-0-2.sharddb-sh.mongodb.svc.cluster.local

sharddb-1-0.sharddb-sh.mongodb.svc.cluster.local

sharddb-1-1.sharddb-sh.mongodb.svc.cluster.local

sharddb-1-2.sharddb-sh.mongodb.svc.cluster.local

sharddb-config-0.sharddb-cs.mongodb.svc.cluster.local

sharddb-config-1.sharddb-cs.mongodb.svc.cluster.local

sharddb-config-2.sharddb-cs.mongodb.svc.cluster.local

sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local

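sharddb-mongos-1.sharddb-svc.mongodb.svc.cluster.local

The first two are the mongos domain names, and the remaining 11 are the internal addresses of the servers. To save effort, these 13 addresses can be written with wildcards: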

[ alt_names ]
DNS.1 = sharddb-mongos0.com
DNS.2 = sharddb-mongos1.com
DNS.3 = *.sharddb-sh.mongodb.svc.cluster.local
DNS.4 = *.sharddb-cs.mongodb.svc.cluster.local
DNS.5 = *.sharddb-svc.mongodb.svc.cluster.local

Note that this cannot be shortened to the following form:

[ alt_names ]
DNS.1 = sharddb-mongos0.com
DNS.2 = sharddb-mongos1.com
DNS.3 = *.mongodb.svc.cluster.local

A wildcard only covers a single level of subdomain. See: Common SSL Certificate Errors and How to Fix Them

Subdomain SANs are applicable to all host names extending the Common Name by one level. For example, support.domain.com could be a Subdomain SAN for a certificate with the Common Name domain.com; advanced.support.domain.com could NOT be covered by a Subdomain SAN in a certificate issued to domain.com, as it is not a direct subdomain of domain.com.
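The one-level rule can be checked before anything is signed. A simplified sketch that handles exact names and a single leading `*.` label only (partial wildcards such as `db*.example.com` are not modelled); `san_covers` and `uncovered_hosts` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Simplified SAN matcher: exact names, or one leading "*." label.
set -euo pipefail

# Succeed if SAN entry $1 covers host name $2.
san_covers() {
  local san=$1 host=$2
  if [[ $san == "$host" ]]; then
    return 0                        # exact match
  fi
  if [[ $san == '*.'* && $host == *.* ]]; then
    local first=${host%%.*}         # left-most label of the host
    local rest=${host#*.}           # everything after the first dot
    # "*" stands for exactly one non-empty label, so the rest of the
    # host must equal the SAN without its "*." prefix.
    if [[ -n $first && $rest == "${san:2}" ]]; then
      return 0
    fi
  fi
  return 1
}

# Read host names on stdin; print each one that none of the SANs in "$@" covers.
uncovered_hosts() {
  local host san covered
  while IFS= read -r host; do
    covered=0
    for san in "$@"; do
      if san_covers "$san" "$host"; then
        covered=1
        break
      fi
    done
    if ((covered == 0)); then
      echo "$host"
    fi
  done
}
```

Piping the 13 addresses through `uncovered_hosts` with the five wildcard entries as arguments should print nothing, while passing only `*.mongodb.svc.cluster.local` prints every in-cluster host, because each of them sits two labels below that suffix.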

The final server.cnf is as follows:

[ req ]
default_bits = 4096
default_md = sha256
distinguished_name = req_dn
req_extensions = v3_req

[ v3_req ]
subjectKeyIdentifier = hash
basicConstraints = CA:FALSE
keyUsage = critical, digitalSignature, keyEncipherment
nsComment = "OpenSSL Generated Certificate for TESTING only. NOT FOR PRODUCTION USE."
extendedKeyUsage = serverAuth, clientAuth
subjectAltName = @alt_names

[ alt_names ]
DNS.1 = sharddb-mongos0.com
DNS.2 = sharddb-mongos1.com
DNS.3 = *.sharddb-sh.mongodb.svc.cluster.local
DNS.4 = *.sharddb-cs.mongodb.svc.cluster.local
DNS.5 = *.sharddb-svc.mongodb.svc.cluster.local

[ req_dn ]
countryName = Country Name (2 letter code)
countryName_default = CN
countryName_min = 2
countryName_max = 2
stateOrProvinceName = State or Province Name (full name)
stateOrProvinceName_default = Beijing
stateOrProvinceName_max = 64
localityName = Locality Name (eg, city)
localityName_default = Beijing
localityName_max = 64
organizationName = Organization Name (eg, company)
organizationName_default = TestComp
organizationName_max = 64
organizationalUnitName = Organizational Unit Name (eg, section)
organizationalUnitName_default = TestComp
organizationalUnitName_max = 64
commonName = Common Name (eg, YOUR name)
commonName_max = 64

Generate the certificate

The following four commands generate the private key server.key, sign the certificate with rootca.key, and produce the final certificate file server.pem:

$ openssl genrsa -out server.key 4096

$ openssl req -new -key server.key -out server.csr -config server.cnf

$ openssl x509 -sha256 -req -days 3650 -in server.csr -CA rootca.crt -CAkey rootca.key -CAcreateserial -out server.crt -extfile server.cnf -extensions v3_req

$ cat server.crt server.key > server.pem

For sample output, see Generate a self-signed CA certificate and server certificates with openssl; it is not repeated here.
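Before server.pem is copied 11 times, it is worth confirming that the certificate chains to the CA and carries the intended SANs. A sketch using `openssl verify` and `openssl x509 -noout -text`; the SAN parsing relies on the `DNS:` prefixes in OpenSSL's text output, and `cert_dns_sans` and `check_server_cert` are hypothetical helpers:

```shell
#!/usr/bin/env bash
set -euo pipefail

# Read `openssl x509 -noout -text` output on stdin and print the DNS
# entries of the Subject Alternative Name extension, one per line.
cert_dns_sans() {
  grep -o 'DNS:[^,[:space:]]*' | sed 's/^DNS://'
}

# Verify that the certificate chains to the CA, then list its DNS SANs.
check_server_cert() {
  local crt=${1:-server.crt} ca=${2:-rootca.crt}
  openssl verify -CAfile "$ca" "$crt"
  openssl x509 -in "$crt" -noout -text | cert_dns_sans
}
```

`check_server_cert server.crt rootca.crt` should print `server.crt: OK` followed by the five DNS entries from `[ alt_names ]`. If a wildcard entry is missing here, the certificate has to be regenerated before any Secret is created.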

Copy the 11 server certificates

The file names have no extension. Make 11 copies of server.pem (the CA file is not counted) and name them as follows:

| File | Save as |
|---|---|
| CA | ca-pem |
| Each shard in your sharded cluster | sharddb-<Y>-<X>-pem |
| Each member of your config server replica set | sharddb-config-<X>-pem |
| Each mongos | sharddb-mongos-<X>-pem |

- Replace <Y> with the 0-based index of the shard in the sharded cluster.

- Replace <X> with the 0-based index of the member within a shard or replica set (or of the mongos instance).
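Making the 11 copies by hand is error-prone, and a loop over the same naming rules does it in one go. A sketch that uses this article's layout as defaults; `copy_pems` is a hypothetical helper, and ca-pem is left out because the CA goes into a ConfigMap rather than a Secret:

```shell
#!/usr/bin/env bash
# Copy one PEM file to every per-pod file name from the table above.
# Defaults: 2 shards x 3 members, 3 config servers, 2 mongos.
set -euo pipefail

# Args: source-pem [name] [shards] [members-per-shard] [config-servers] [mongos]
copy_pems() {
  local src=$1 name=${2:-sharddb} shards=${3:-2} per_shard=${4:-3}
  local configs=${5:-3} mongos=${6:-2} y x
  for ((y = 0; y < shards; y++)); do
    for ((x = 0; x < per_shard; x++)); do
      cp "$src" "$name-$y-$x-pem"
    done
  done
  for ((x = 0; x < configs; x++)); do
    cp "$src" "$name-config-$x-pem"
  done
  for ((x = 0; x < mongos; x++)); do
    cp "$src" "$name-mongos-$x-pem"
  done
}
```

Run it in the directory that contains server.pem: `copy_pems server.pem`. Every pod gets the same certificate here because its SANs already cover all 13 addresses.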

Create the certificate Secrets and ConfigMap
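The commands in this section follow a fixed pattern: one Secret per shard, plus one each for the config servers and the mongos instances. A sketch that prints them for arbitrary counts; it passes `-n mongodb` so the objects land in the same namespace as the MongoDB resource, and uses `--from-file=ca-pem=rootca.crt`, kubectl's `key=path` form, which stores rootca.crt under the key ca-pem without renaming the file. `tls_secret_cmds` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Print the kubectl commands that create the TLS Secrets and the CA ConfigMap.
set -euo pipefail

# Args: [name] [namespace] [shards] [members-per-shard] [config-servers] [mongos]
tls_secret_cmds() {
  local name=${1:-sharddb} ns=${2:-mongodb} shards=${3:-2} per_shard=${4:-3}
  local configs=${5:-3} mongos=${6:-2} y x args
  # One Secret per shard, holding the PEM of every member of that shard.
  for ((y = 0; y < shards; y++)); do
    args=""
    for ((x = 0; x < per_shard; x++)); do
      args+=" --from-file=$name-$y-$x-pem"
    done
    echo "kubectl create secret generic $name-$y-cert -n $ns$args"
  done
  args=""
  for ((x = 0; x < configs; x++)); do
    args+=" --from-file=$name-config-$x-pem"
  done
  echo "kubectl create secret generic $name-config-cert -n $ns$args"
  args=""
  for ((x = 0; x < mongos; x++)); do
    args+=" --from-file=$name-mongos-$x-pem"
  done
  echo "kubectl create secret generic $name-mongos-cert -n $ns$args"
  echo "kubectl create configmap custom-ca -n $ns --from-file=ca-pem=rootca.crt"
}
```

Review the printed commands, then run them with `tls_secret_cmds | bash` from the directory holding the `-pem` files and rootca.crt.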

Create the shard TLS certificates

$ kubectl create secret generic sharddb-0-cert --from-file=sharddb-0-0-pem --from-file=sharddb-0-1-pem --from-file=sharddb-0-2-pem -n mongodb

$ kubectl create secret generic sharddb-1-cert --from-file=sharddb-1-0-pem --from-file=sharddb-1-1-pem --from-file=sharddb-1-2-pem -n mongodb

Create the ConfigServer TLS certificates

$ kubectl create secret generic sharddb-config-cert --from-file=sharddb-config-0-pem --from-file=sharddb-config-1-pem --from-file=sharddb-config-2-pem -n mongodb

Create the Mongos TLS certificates

$ kubectl create secret generic sharddb-mongos-cert --from-file=sharddb-mongos-0-pem --from-file=sharddb-mongos-1-pem -n mongodb

Create the CA ConfigMap

Rename rootca.crt to ca-pem, then run:

$ kubectl create configmap custom-ca --from-file=ca-pem -n mongodb

Enable TLS

See: Configure TLS for a Sharded Cluster

Compared with step 1, sharddb-step2.yaml only adds the security section at the end. Note that mongodsPerShardCount is still 1 at this point:

apiVersion: mongodb.com/v1
kind: MongoDB
metadata:
  name: sharddb
  namespace: mongodb
spec:
  shardCount: 2
  mongodsPerShardCount: 1
  mongosCount: 2
  configServerCount: 3
  version: 4.2.2-ent
  type: ShardedCluster
  opsManager:
    configMapRef:
      name: ops-manager-connection
  credentials: om-user-credentials
  exposedExternally: false
  shardPodSpec:
    cpu: "1"
    memory: 1Gi
    persistence:
      single:
        storage: 5Gi
  configSrvPodSpec:
    cpu: "1"
    memory: 1Gi
    persistence:
      single:
        storage: 5Gi
  mongosPodSpec:
    cpu: "1"
    memory: 1Gi
  security:
    tls:
      enabled: true
      ca: custom-ca

Keeping mongodsPerShardCount: 1 here is critical. If it is not 1, enabling TLS hangs. This looks like an Ops Manager bug in the generated SSL migration plan. A correct plan moves the three servers of a replica set forward together, one SSL state at a time: disabled -> sslAllowed -> sslPreferred -> sslRequired. Among the three instances of a shard server, however, one switches to requireSSL ahead of the others, so the other two instances can no longer connect to it (and consider it down). To preserve availability they stop updating themselves, and the whole process deadlocks.
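Why the states must advance one step at a time can be seen in a small model of the documented SSL modes: with allowSSL, connections between servers do not use TLS, but both kinds of incoming connections are accepted; with preferSSL, connections between servers use TLS and both kinds are accepted; with requireSSL, only TLS is used and accepted. A simplified sketch that ignores certificate validation entirely; `dials_tls` and `can_reach` are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Simplified reachability model for mongod SSL modes
# (disabled, allowSSL, preferSSL, requireSSL). Certificates are ignored.
set -euo pipefail

# Does a member in mode $1 use TLS for its outgoing server-to-server connections?
dials_tls() {
  [[ $1 == preferSSL || $1 == requireSSL ]]
}

# Can a member in mode $1 open a connection to a member in mode $2?
can_reach() {
  local from=$1 to=$2
  if dials_tls "$from"; then
    # TLS connection: accepted by every mode except disabled.
    [[ $to == allowSSL || $to == preferSSL || $to == requireSSL ]]
  else
    # Plain connection: rejected only by requireSSL.
    [[ $to == disabled || $to == allowSSL || $to == preferSSL ]]
  fi
}
```

A member one step ahead (preferSSL) is still reachable from peers in allowSSL, but a member two steps ahead (requireSSL) is not: `can_reach allowSSL requireSSL` fails, which matches the `SSLHandshakeFailed: The server is configured to only allow SSL connections` errors in the logs of the "Common errors and fixes" section.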

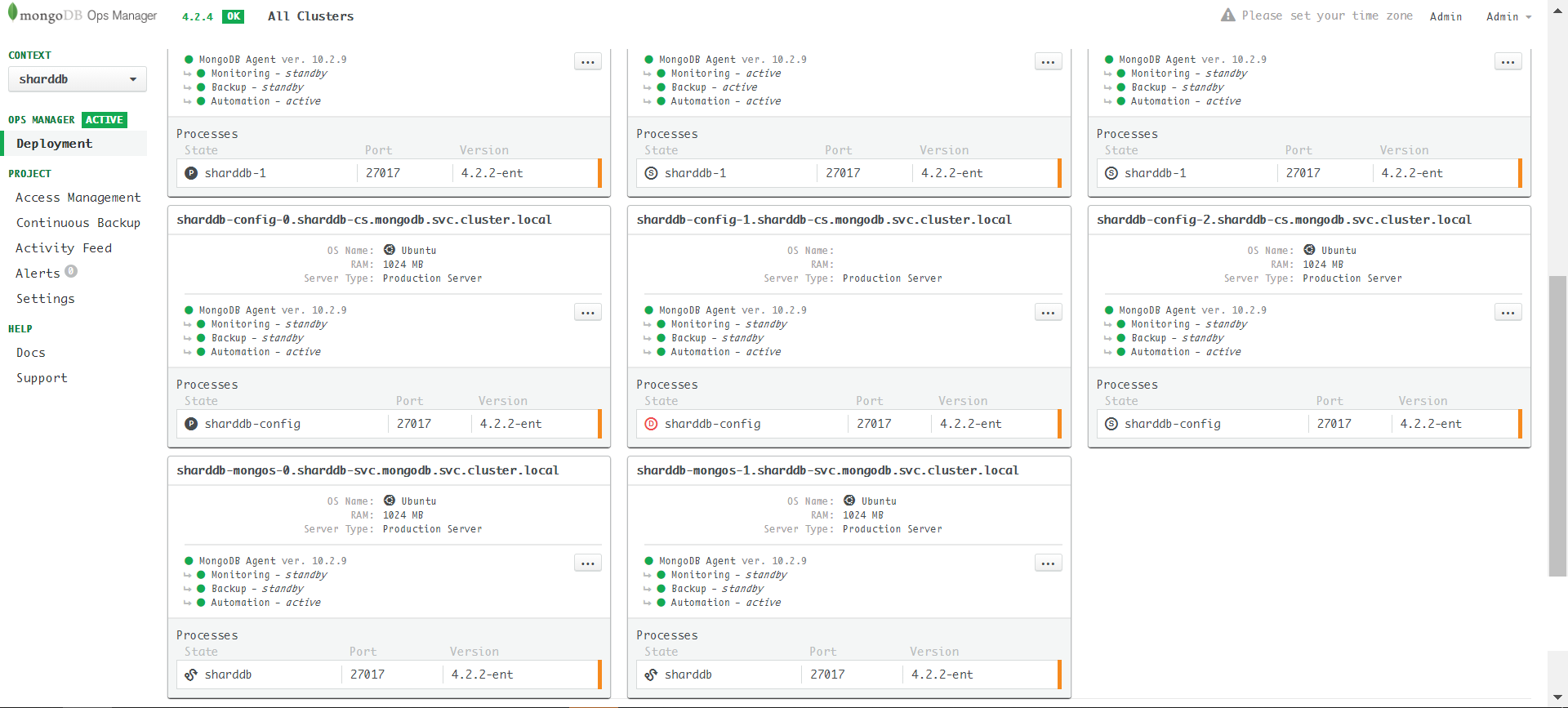

应用sharddb-step2.yaml之后即可开启TLS,可以从Ops

Manager的servers界面中看到server由configServer到shardServer倒序依次更新。

开启TLS成功的状态:

Scale each shard out to multiple mongod instances

Compared with step 2, sharddb-step3.yaml only changes mongodsPerShardCount to 3:

apiVersion: mongodb.com/v1

kind: MongoDB

metadata:

name: sharddb

namespace: mongodb

spec:

shardCount: 2

mongodsPerShardCount: 3

mongosCount: 2

configServerCount: 3

version: 4.2.2-ent

type: ShardedCluster

opsManager:

configMapRef:

name: ops-manager-connection

credentials: om-user-credentials

exposedExternally: false

shardPodSpec:

cpu: "1"

memory: 1Gi

persistence:

single:

storage: 5Gi

configSrvPodSpec:

cpu: "1"

memory: 1Gi

persistence:

single:

storage: 5Gi

mongosPodSpec:

cpu: "1"

memory: 1Gi

security:

tls:

enabled: true

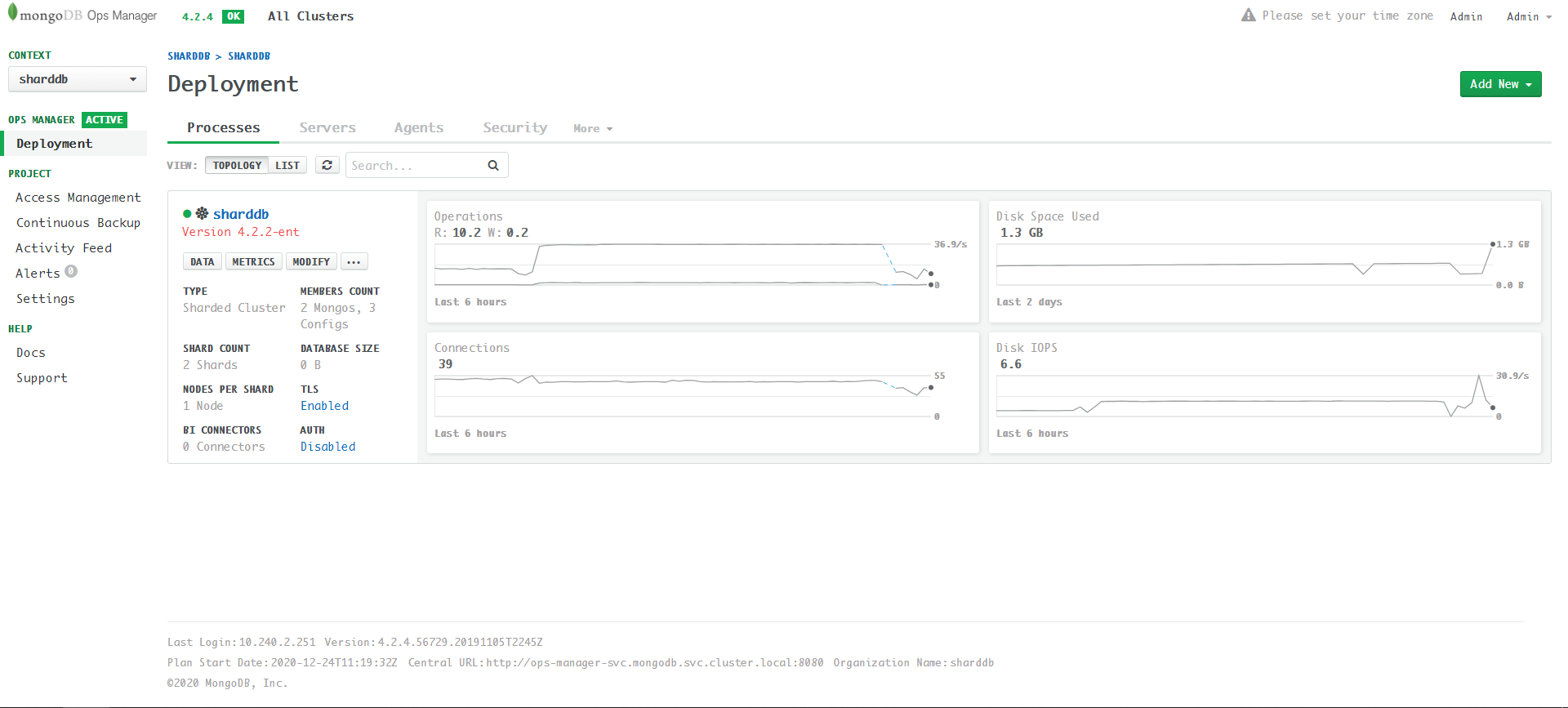

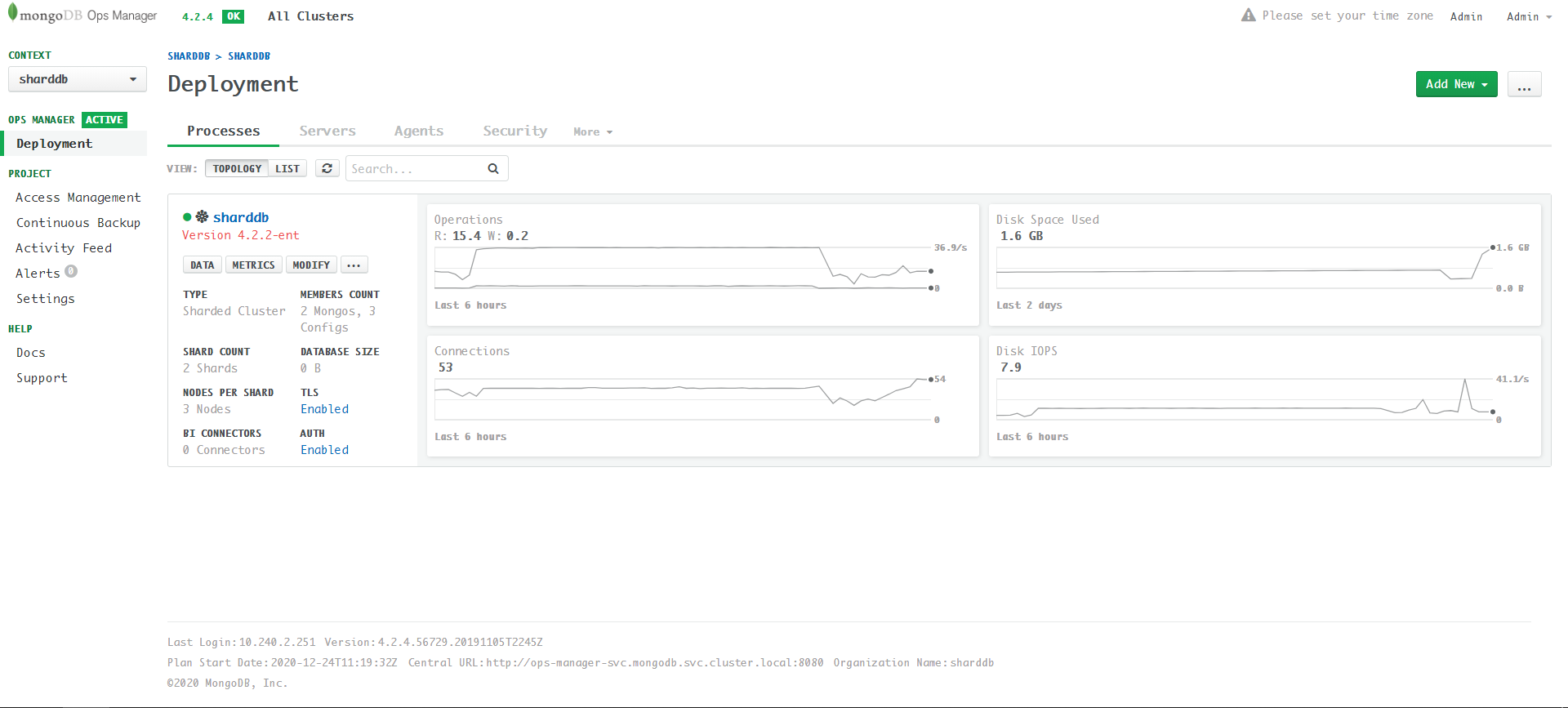

ca: custom-ca应用后最终效果,共11个server:

最终更新成功的状态(界面开启AUTH):

Common errors and fixes

Invalid certificate

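If the SANs configured in the certificate are wrong, for example an invalid wildcard address, the following error appears while the cluster is updating after TLS has been turned on: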

Error checking out client (0x0) for connParam=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error dialing to connParams=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 1 identities, but none of them worked. They were ()

Failed to compute states :

[06:36:25.568] Error calling ComputeState : [06:36:25.568] Error getting fickle state for current state : [06:36:25.568] Error checking if mongos = sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) is up : [06:36:25.568] Error executing WithClientFor() for cp=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error checking out client (0x0) for connParam=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error dialing to connParams=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 1 identities, but none of them worked. They were () Failed to planAndExecute :

[06:36:25.568] Failed to compute states : [06:36:25.568] Error calling ComputeState : [06:36:25.568] Error getting fickle state for current state : [06:36:25.568] Error checking if mongos = sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) is up : [06:36:25.568] Error executing WithClientFor() for cp=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error checking out client (0x0) for connParam=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error dialing to connParams=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 1 identities, but none of them worked. They were () Error checking if mongos = sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) is up :

[06:36:25.568] Error executing WithClientFor() for cp=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error checking out client (0x0) for connParam=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error dialing to connParams=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 1 identities, but none of them worked. They were () Error getting fickle state for current state :

[06:36:25.568] Error checking if mongos = sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) is up : [06:36:25.568] Error executing WithClientFor() for cp=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error checking out client (0x0) for connParam=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error dialing to connParams=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 1 identities, but none of them worked. They were () Error calling ComputeState :

[06:36:25.568] Error getting fickle state for current state : [06:36:25.568] Error checking if mongos = sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) is up : [06:36:25.568] Error executing WithClientFor() for cp=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error checking out client (0x0) for connParam=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false) connectMode=SingleConnect : [06:36:25.568] Error dialing to connParams=sharddb-mongos-0.sharddb-svc.mongodb.svc.cluster.local:27017 (local=false): tried 1 identities, but none of them worked. They were () mongod cannot perform Global Update Action because configSvr process = (sharddb-config-2.sharddb-cs.mongodb.svc.cluster.local:27017) is not healthy and done

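As with a ReplicaSet, this problem is caused by the certificate. Check whether the [alt_names] section of server.cnf is configured correctly, then regenerate the 11 server certificates and redeploy.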

Enabling TLS with mongodsPerShardCount set to 3

If the three steps are reduced to two, that is, mongodsPerShardCount is set to 3 when the cluster is created, the TLS update hangs.


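Checking the logs on the servers shows that this is the problem with the SSL migration plan generated by Ops Manager mentioned earlier. The migration plan must move the three servers forward one step at a time: disabled -> sslAllowed -> sslPreferred -> sslRequired. If any server jumps ahead, the update cannot continue.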

Here is the log of a stuck shard server:

{"logType":"automation-agent-verbose","contents":"[2020/12/26 09:19:10.024] [.info] [cm/director/director.go:executePlan:866]

[09:19:10.024] Running step 'WaitSSLUpdate' as part of move 'UpgradeSSLModeFromAllowedToPrefered'"} {"logType":"automation-agent-verbose","contents":"[2020/12/26 09:19:10.024] [.info] [cm/director/director.go:tracef:771] [09:19:10.024] Precondition of 'WaitSSLUpdate' applies because "} {"logType":"automation-agent-verbose","contents":"['currentState.Up' = true]"} {"logType":"automation-agent-verbose","contents":"[2020/12/26 09:19:10.024] [.info] [cm/director/director.go:planAndExecute:534] [09:19:10.024] Step=WaitSSLUpdate as part of Move=UpgradeSSLModeFromAllowedToPrefered in plan failed : ."} {"logType":"automation-agent-verbose","contents":" Recomputing a plan..."} {"logType":"automation-agent-verbose","contents":"[2020/12/26 09:19:10.132] [.info] [main/components/agent.go:LoadClusterConfig:197] [09:19:10.132] clusterConfig unchanged"} {"logType":"mongodb","contents":"2020-12-26T09:19:11.078+0000 I NETWORK [listener] connection accepted from 10.240.7.82:40502 #76924 (42 connections now open)"} {"logType":"mongodb","contents":"2020-12-26T09:19:11.088+0000 W NETWORK [conn76924] no SSL certificate provided by peer"} {"logType":"automation-agent-verbose","contents":"[2020/12/26 09:19:11.142] [.info] [main/components/agent.go:LoadClusterConfig:197] [09:19:11.142] clusterConfig unchanged"} {"logType":"mongodb","contents":"2020-12-26T09:19:11.151+0000 I CONNPOOL [Replication] Connecting to sharddb-0-2.sharddb-sh.mongodb.svc.cluster.local:27017"} {"logType":"automation-agent-stdout","contents":" [09:19:11.158] ... process has a plan : UpgradeSSLModeFromAllowedToPrefered,UpgradeSSLModeFromPreferedToRequired"} {"logType":"automation-agent-stdout","contents":" [09:19:11.158] Running step 'CheckSSLAllowedAndWantSSLPrefered' as part of move 'UpgradeSSLModeFromAllowedToPrefered'"} {"logType":"automation-agent-verbose","contents":"[2020/12/26 09:19:11.158] [.info] [cm/director/director.go:computePlan:269] [09:19:11.158] ... 
process has a plan : UpgradeSSLModeFromAllowedToPrefered,UpgradeSSLModeFromPreferedToRequired"} {"logType":"automation-agent-stdout","contents":" [09:19:11.158] Running step 'WaitSSLUpdate' as part of move 'UpgradeSSLModeFromAllowedToPrefered'"} {"logType":"mongodb","contents":"2020-12-26T09:19:11.161+0000 I REPL_HB [replexec-8] Heartbeat to sharddb-0-2.sharddb-sh.mongodb.svc.cluster.local:27017 failed after 2 retries, response status: HostUnreachable: Connection closed by peer"} {"logType":"automation-agent-verbose","contents":"[2020/12/26 09:19:11.158] [.info] [cm/director/director.go:executePlan:866] [09:19:11.158] Running step 'CheckSSLAllowedAndWantSSLPrefered' as part of move 'UpgradeSSLModeFromAllowedToPrefered'"} {"logType":"automation-agent-verbose","contents":"[2020/12/26 09:19:11.158] [.info] [cm/director/director.go:tracef:771] [09:19:11.158] Precondition of 'CheckSSLAllowedAndWantSSLPrefered' applies because "} {"logType":"automation-agent-verbose","contents":"[All the following are true: "} {"logType":"automation-agent-verbose","contents":" ['currentState.Up' = true]"} {"logType":"automation-agent-verbose","contents":" [All the following are true: "} {"logType":"automation-agent-verbose","contents":" [All BOUNCE_RESTART process args are equivalent :"} {"logType":"automation-agent-verbose","contents":" [<'storage.engine' is equal: absent in desiredArgs=map[dbPath:/data], and default value=wiredTiger in currentArgs=map[dbPath:/data engine:wiredTiger]>]"} {"logType":"automation-agent-verbose","contents":" [<'processManagement' is equal: absent in desiredArgs=map[net:map[bindIp:0.0.0.0 port:27017 ssl:map[CAFile:/mongodb-automation/ca.pem PEMKeyFile:/mongodb-automation/server.pem allowConnectionsWithoutCertificates:true mode:requireSSL]] replication:map[replSetName:sharddb-0] sharding:map[clusterRole:shardsvr] storage:map[dbPath:/data] systemLog:map[destination:file path:/var/log/mongodb-mms-automation/mongodb.log]], and default value=map[] in 
currentArgs=map[net:map[bindIp:0.0.0.0 port:27017 ssl:map[CAFile:/mongodb-automation/ca.pem PEMKeyFile:/mongodb-automation/server.pem allowConnectionsWithoutCertificates:true mode:allowSSL]] processManagement:map[] replication:map[replSetName:sharddb-0] sharding:map[clusterRole:shardsvr] storage:map[dbPath:/data engine:wiredTiger] systemLog:map[destination:file path:/var/log/mongodb-mms-automation/mongodb.log]]>]"} {"logType":"automation-agent-verbose","contents":" ]"} {"logType":"automation-agent-verbose","contents":" [ ]"} {"logType":"automation-agent-verbose","contents":" ]"} {"logType":"automation-agent-verbose","contents":" ['SSL mode' = allowSSL]"} {"logType":"automation-agent-verbose","contents":" ['SSL mode' = requireSSL]"} {"logType":"automation-agent-verbose","contents":"]"}

And here is the log of a shard server that has already reached Goal State:

{"logType":"mongodb","contents":"2020-12-26T09:33:57.679+0000 I NETWORK [conn348143] end connection 10.240.7.120:51662 (19 connections now open)"} {"logType":"mongodb","contents":"2020-12-26T09:33:57.728+0000 I NETWORK [listener] connection accepted from 10.240.5.150:57414 #348144 (20 connections now open)"} {"logType":"mongodb","contents":"2020-12-26T09:33:57.728+0000 I NETWORK [conn348144] Error receiving request from client: SSLHandshakeFailed: The server is configured to only allow SSL connections. Ending connection from 10.240.5.150:57414 (connection id: 348144)"} {"logType":"mongodb","contents":"2020-12-26T09:33:57.728+0000 I NETWORK [conn348144] end connection 10.240.5.150:57414 (19 connections now open)"} {"logType":"mongodb","contents":"2020-12-26T09:33:57.733+0000 I NETWORK [listener] connection accepted from 10.240.5.150:57416 #348145 (20 connections now open)"} {"logType":"mongodb","contents":"2020-12-26T09:33:57.733+0000 I NETWORK [conn348145] Error receiving request from client: SSLHandshakeFailed: The server is configured to only allow SSL connections. Ending connection from 10.240.5.150:57416 (connection id: 348145)"}

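So the shard server that reached Goal State switched to requireSSL too early, and the other two servers could no longer connect to it. I did not find a good fix; the only workaround I could come up with is the one used in this article: first shrink each shard to one instance, enable TLS, and then scale it back to three. This is the biggest pitfall of deploying a Sharded Cluster with MongoDB Ops Manager.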

Delete the Sharded Cluster

If any step goes wrong, delete sharddb with the following command:

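kubectl delete mdb sharddb -n mongodb

Note that the shardServer and configServer members of sharddb are stateful, i.e. they have PVs attached. The command above does not release those PVs in Kubernetes, so after the cluster is recreated it may mount the old PVs again, which can cause unexpected errors (for example, the cluster had 3 servers before deletion and only 1 after recreation, yet that server still tries to talk to the other two and fails). Therefore, besides running the command above, also delete the PersistentVolumeClaims and their PVs in the Kubernetes cluster to clear the broken state completely.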
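The cleanup can be scripted. StatefulSet volume claims are named `<template>-<statefulset>-<ordinal>`, so this sketch assumes every PVC of this cluster contains `sharddb` in its name, and that the storage class releases the PV when its PVC is deleted (reclaim policy `Delete`); PVs with reclaim policy `Retain` still have to be removed by hand. `run` and `teardown` are hypothetical helpers, and with `DRY_RUN=1` (the default) the commands are only printed:

```shell
#!/usr/bin/env bash
set -euo pipefail

NS=mongodb
NAME=sharddb

# Print the command (DRY_RUN=1, the default) or execute it (DRY_RUN=0).
run() {
  if [[ ${DRY_RUN:-1} == 1 ]]; then
    echo "$*"
  else
    "$@"
  fi
}

# Delete the MongoDB resource, then every PVC whose name contains $NAME.
teardown() {
  local pvc
  run kubectl delete mdb "$NAME" -n "$NS"
  # `kubectl get -o name` prints entries such as persistentvolumeclaim/<pvc-name>.
  for pvc in $(kubectl get pvc -n "$NS" -o name | grep -- "$NAME" || true); do
    run kubectl delete "$pvc" -n "$NS"
  done
}
```

Run `teardown` first to review the list, then `DRY_RUN=0 teardown` once it contains only this cluster's claims.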